
A TOP-LEVEL VIEW OF COMPUTER FUNCTION AND INTERCONNECTION

KEY POINTS

◆ An instruction cycle consists of an instruction fetch, followed by zero or more operand fetches, followed by zero or more operand stores, followed by an interrupt check (if interrupts are enabled).

◆ The major computer system components (processor, main memory, I/O modules) need to be interconnected in order to exchange data and control signals. The most popular means of interconnection is the use of a shared system bus consisting of multiple lines. In contemporary systems, there typically is a hierarchy of buses to improve performance.

◆ Key design elements for buses include arbitration (whether permission to send signals on bus lines is controlled centrally or in a distributed fashion); timing (whether signals on the bus are synchronized to a central clock or are sent asynchronously based on the most recent transmission); and width (number of address lines and number of data lines).

At a top level, a computer consists of a CPU (central processing unit), memory, and I/O components, with one or more modules of each type. These components are interconnected in some fashion to achieve the basic function of the computer, which is to execute programs. Thus, at a top level, we can describe a computer system by (1) describing the external behavior of each component, that is, the data and control signals that it exchanges with other components; and (2) describing the interconnection structure and the controls required to manage the use of the interconnection structure.

This top-level view of structure and function is important because of its explanatory power in understanding the nature of a computer. Equally important is its use in understanding the increasingly complex issues of performance evaluation. A grasp of the top-level structure and function offers insight into system bottlenecks, alternate pathways, the magnitude of system failures if a component fails, and the ease of adding performance enhancements. In many cases, requirements for greater system power and fail-safe capabilities are being met by changing the design rather than merely increasing the speed and reliability of individual components.

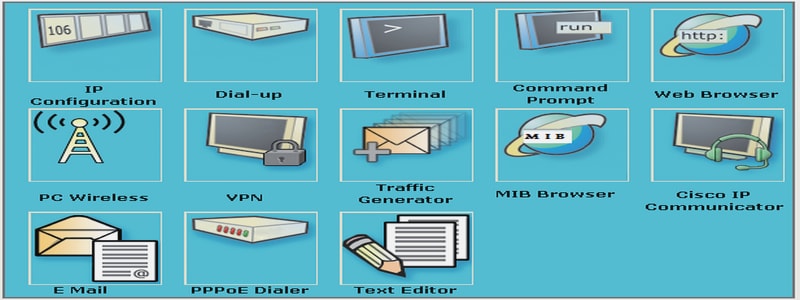

This chapter focuses on the basic structures used for computer component interconnection. As background, the chapter begins with a brief examination of the basic components and their interface requirements. Then a functional overview is provided. We are then prepared to examine the use of buses to interconnect system components.

COMPUTER COMPONENTS

As discussed in Chapter 2, virtually all contemporary computer designs are based on concepts developed by John von Neumann at the Institute for Advanced Study, Princeton. Such a design is referred to as the von Neumann architecture and is based on three key concepts:

- Data and instructions are stored in a single read–write memory.

- The contents of this memory are addressable by location, without regard to the type of data contained there.

- Execution occurs in a sequential fashion (unless explicitly modified) from one instruction to the next.

The reasoning behind these concepts was discussed in Chapter 2 but is worth summarizing here. There is a small set of basic logic components that can be combined in various ways to store binary data and to perform arithmetic and logical operations on that data. If there is a particular computation to be performed, a configuration of logic components designed specifically for that computation could be constructed. We can think of the process of connecting the various components in the desired configuration as a form of programming. The resulting "program" is in the form of hardware and is termed a hardwired program.

Now consider this alternative. Suppose we construct a general-purpose configuration of arithmetic and logic functions. This set of hardware will perform various functions on data depending on control signals applied to the hardware. In the original case of customized hardware, the system accepts data and produces results (Figure 3.1a). With general-purpose hardware, the system accepts data and control signals and produces results. Thus, instead of rewiring the hardware for each new program, the programmer merely needs to supply a new set of control signals.

How shall control signals be supplied? The answer is simple but subtle. The entire program is actually a sequence of steps. At each step, some arithmetic or logical operation is performed on some data. For each step, a new set of control signals is needed. Let us provide a unique code for each possible set of control signals, and let us add to the general-purpose hardware a segment that can accept a code and generate control signals (Figure 3.1b).

Programming is now much easier. Instead of rewiring the hardware for each new program, all we need to do is provide a new sequence of codes. Each code is, in effect, an instruction, and part of the hardware interprets each instruction and generates control signals. To distinguish this new method of programming, a sequence of codes or instructions is called software.
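The contrast between a hardwired program and software can be sketched in a few lines. This is a minimal illustration, not a model of any real CPU: the 2-bit codes, the signal names (`ADD`, `SUB`, `AND`, `OR`), the 16-bit result width, and the function names are all hypothetical. The general-purpose unit performs whichever function its control signals select, and the interpreter turns each code into a set of control signals.

```python
# Hypothetical sketch: codes, signal names, and 16-bit width are assumptions.
WORD_MASK = 0xFFFF  # keep results within an assumed 16-bit word


def hardwired_add(a, b):
    """A 'hardwired program': this hardware can only ever add."""
    return (a + b) & WORD_MASK


def general_purpose_unit(a, b, signals):
    """General-purpose arithmetic/logic hardware: the function it performs
    depends only on the control signals applied to it."""
    if "ADD" in signals:
        return (a + b) & WORD_MASK
    if "SUB" in signals:
        return (a - b) & WORD_MASK
    if "AND" in signals:
        return a & b
    if "OR" in signals:
        return a | b
    raise ValueError(f"no function selected by {sorted(signals)}")


# Instruction interpreter: each code stands for one set of control signals.
CODE_TO_SIGNALS = {
    0b00: {"ADD"},
    0b01: {"SUB"},
    0b10: {"AND"},
    0b11: {"OR"},
}


def run_software(codes, a, b):
    """Run a sequence of codes ('software'); each step's result replaces a."""
    for code in codes:
        signals = CODE_TO_SIGNALS[code]  # interpret code -> control signals
        a = general_purpose_unit(a, b, signals)
    return a
```

For example, `run_software([0b00, 0b00], 5, 3)` adds 3 twice and returns 11. Changing the computation means supplying a different list of codes; the hardware functions themselves stay the same.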

Figure 3.1b indicates two major components of the system: an instruction interpreter and a module of general-purpose arithmetic and logic functions. These two constitute the CPU. Several other components are needed to yield a functioning computer. Data and instructions must be put into the system. For this we need some sort of input module. This module contains basic components for accepting data and instructions in some form and converting them into an internal form of signals usable by the system. A means of reporting results is needed, and this is in the form of an output module. Taken together, these are referred to as I/O components.

One more component is needed. An input device will bring instructions and data in sequentially. But a program is not invariably executed sequentially; it may jump around (e.g., the IAS jump instruction). Similarly, operations on data may require access to more than just one element at a time in a predetermined sequence. Thus, there must be a place to store temporarily both instructions and data. That module is called memory, or main memory to distinguish it from external storage or peripheral devices. Von Neumann pointed out that the same memory could be used to store both instructions and data.

Figure 3.2 illustrates these top-level components and suggests the interactions among them. The CPU exchanges data with memory. For this purpose, it typically makes use of two internal (to the CPU) registers: a memory address register (MAR), which specifies the address in memory for the next read or write, and a memory buffer register (MBR), which contains the data to be written into memory or receives the data read from memory. Similarly, an I/O address register (I/OAR) specifies a particular I/O device, and an I/O buffer register (I/OBR) is used for the exchange of data between an I/O module and the CPU.
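The role of the MAR and MBR in a memory access can be sketched as follows. This is a simplified model under stated assumptions: memory is a Python list indexed by address, words are 16 bits, and the class and method names are hypothetical. Every read or write first places the address in the MAR; the data then passes through the MBR.

```python
class Processor:
    """Hypothetical sketch: every memory access goes through MAR and MBR."""

    WORD_MASK = 0xFFFF  # assumption: 16-bit memory words

    def __init__(self, memory):
        self.memory = memory  # list indexed by address
        self.mar = 0          # memory address register
        self.mbr = 0          # memory buffer register

    def memory_read(self, address):
        self.mar = address                  # address of the location to read
        self.mbr = self.memory[self.mar]    # data read from memory lands in MBR
        return self.mbr

    def memory_write(self, address, value):
        self.mar = address                  # address of the location to write
        self.mbr = value & self.WORD_MASK   # data to be written is held in MBR
        self.memory[self.mar] = self.mbr
```

The I/OAR and I/OBR would play the analogous roles for transfers with an I/O module; they are left out to keep the sketch short.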

A memory module consists of a set of locations, defined by sequentially numbered addresses. Each location contains a binary number that can be interpreted as either an instruction or data. An I/O module transfers data from external devices to CPU and memory, and vice versa. It contains internal buffers for temporarily holding these data until they can be sent on.
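The buffering done by an I/O module can be pictured as a small first-in, first-out queue between a device and the rest of the system. The sketch below is an illustration only: the class name, the fixed capacity, and the "refuse when full" policy are hypothetical choices, not a description of any particular I/O module.

```python
from collections import deque


class IOModule:
    """Hypothetical I/O module that holds words until they can be sent on."""

    def __init__(self, capacity=8):
        self.buffer = deque()    # internal FIFO buffer
        self.capacity = capacity

    def receive_from_device(self, word):
        """Accept a word from the external device; refuse it if the buffer is full."""
        if len(self.buffer) >= self.capacity:
            return False         # device must wait and retry
        self.buffer.append(word)
        return True

    def send_to_system(self):
        """Hand the oldest buffered word to the CPU or memory, or None if empty."""
        return self.buffer.popleft() if self.buffer else None
```

A FIFO keeps words in arrival order, which is what a device such as a keyboard or network link would expect; the capacity limit stands in for the finite size of a real module's buffers.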

Having looked briefly at these major components, we now turn to an overview of how these components function together to execute programs.

COMPUTER FUNCTION

The basic function performed by a computer is execution of a program, which consists of a set of instructions stored in memory. The processor does the actual work by executing instructions specified in the program. This section provides an overview of the key elements of program execution. In its simplest form, instruction processing consists of two steps: The processor reads (fetches) instructions from memory one at a time and executes each instruction. Program execution consists of repeating the process of instruction fetch and instruction execution. The instruction execution may involve several operations and depends on the nature of the instruction (see, for example, the lower portion of Figure 2.4).

The processing required for a single instruction is called an instruction cycle. Using the simplified two-step description given previously, the instruction cycle is depicted in Figure 3.3. The two steps are referred to as the fetch cycle and the execute cycle. Program execution halts only if the machine is turned off, some sort of unrecoverable error occurs, or a program instruction that halts the computer is encountered.

Instruction Fetch and Execute

At the beginning of each instruction cycle, the processor fetches an instruction from memory. In a typical processor, a register called the program counter (PC) holds the address of the instruction to be fetched next. Unless told otherwise, the processor always increments the PC after each instruction fetch so that it will fetch the next instruction in sequence (i.e., the instruction located at the next higher memory address). So, for example, consider a computer in which each instruction occupies one 16-bit word of memory. Assume that the program counter is set to location 300. The processor will next fetch the instruction at location 300. On succeeding instruction cycles, it will fetch instructions from locations 301, 302, 303, and so on. This sequence may be altered, as explained presently.
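The PC sequencing in this example can be traced with a short loop. This is a sketch under stated assumptions: memory is a dictionary from address to a one-word instruction, the instruction contents are hypothetical placeholders, and the variable `ir` stands in for the instruction register described in the next paragraph. No instruction alters the sequence here, so the PC simply advances by one word per fetch.

```python
def fetch_sequence(memory, pc, cycles):
    """Fetch `cycles` instructions, incrementing the PC after each fetch.

    Returns the (address, instruction) pairs fetched and the final PC value.
    """
    fetched = []
    for _ in range(cycles):
        ir = memory[pc]           # fetch: the word at PC is loaded into the IR
        fetched.append((pc, ir))
        pc += 1                   # one word per instruction -> next address
    return fetched, pc


# Hypothetical instruction words stored at locations 300-303.
memory = {300: 0x1111, 301: 0x2222, 302: 0x3333, 303: 0x4444}
trace, next_pc = fetch_sequence(memory, 300, 4)
```

The trace visits locations 300, 301, 302, and 303, and leaves the PC at 304, ready for the next fetch.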

The fetched instruction is loaded into a register in the processor known as the instruction register (IR). The instruction contains bits that specify the action the processor is to take. The processor interprets the instruction and performs the required action. In general, these actions fall into four categories:

- Processor-memory: Data may be transferred from processor to memory or from memory to processor.

- Processor-I/O: Data may be transferred to or from a peripheral device by transferring between the processor and an I/O module.

- Data processing: The processor may perform some arithmetic or logic operation on data.

- Control: An instruction may specify that the sequence of execution be altered. For example, the processor may fetch an instruction from location 149, which specifies that the next instruction be from location 182. The processor will remember this fact by setting the program counter to 182. Thus, on the next fetch cycle, the instruction will be fetched from location 182 rather than 150.

An instruction’s execution may involve a combination of these actions.
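The control example above (location 149 redirecting execution to 182) can be traced with a slightly extended loop. In this sketch the instructions are hypothetical `(opcode, operand)` tuples, and only two opcodes are modeled: `JUMP`, which overwrites the PC, and `HALT`, which stops execution. The other three categories of action are acknowledged in a comment but not simulated.

```python
def trace_fetches(memory, pc, max_cycles=1000):
    """Return the addresses fetched until a HALT instruction is executed."""
    fetched = []
    for _ in range(max_cycles):
        opcode, operand = memory[pc]  # fetch cycle: instruction at PC
        fetched.append(pc)
        pc += 1                       # default: next instruction in sequence
        if opcode == "HALT":          # a halting instruction ends execution
            return fetched
        if opcode == "JUMP":          # control: alter the execution sequence
            pc = operand
        # Processor-memory, processor-I/O, and data-processing actions
        # would be carried out here; this sketch does not model them.
    raise RuntimeError("no HALT within max_cycles")


# Hypothetical program: the instruction at 149 transfers control to 182.
memory = {
    148: ("LOAD", 0x940),
    149: ("JUMP", 182),
    150: ("HALT", None),   # skipped because of the jump
    182: ("HALT", None),
}
```

Starting at 148, `trace_fetches(memory, 148)` fetches from 148, 149, and then 182; location 150 is never fetched, because executing the jump replaced the incremented PC value with 182.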

Consider a simple example using a hypothetical machine that includes the characteristics listed in Figure 3.4. The processor contains a single data register, called an accumulator (AC). Both instructions and data are 16 bits long. Thus, it is convenient to organize memory using 16-bit words. The instruction format provides 4 bits for the opcode and the remaining 12 bits for a memory address, so that there can be as many as $2^4 = 16$ different opcodes, and up to $2^{12} = 4096$ (4K) words of memory can be directly addressed.
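Splitting a 16-bit instruction word into its 4-bit opcode and 12-bit address takes one shift and one mask. The sketch assumes the opcode occupies the four high-order bits; the function name `decode` and the sample word `0x1940` are hypothetical illustrations.

```python
OPCODE_BITS = 4
ADDRESS_BITS = 12
WORD_BITS = OPCODE_BITS + ADDRESS_BITS   # 16-bit instruction word

NUM_OPCODES = 2 ** OPCODE_BITS           # 16 distinct opcodes
ADDRESSABLE_WORDS = 2 ** ADDRESS_BITS    # 4096 (4K) directly addressable words


def decode(word):
    """Split a 16-bit instruction word into (opcode, address).

    Assumes the opcode occupies the 4 high-order bits.
    """
    if not 0 <= word < 2 ** WORD_BITS:
        raise ValueError(f"{word:#x} is not a 16-bit word")
    opcode = word >> ADDRESS_BITS                # top 4 bits
    address = word & (ADDRESSABLE_WORDS - 1)     # low-order 12 bits
    return opcode, address
```

For example, `decode(0x1940)` returns opcode 1 and address `0x940`. Because memory holds only bit patterns, the same word read as an unsigned integer is simply 6464; whether it is treated as an instruction or as data depends on how the processor uses it.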

Chapter 0 Reader’s Guide

0.1 Outline of the Book

0.2 A Roadmap for Readers and Instructors

0.3 Why Study Computer Organization and Architecture

0.4 Internet and Web Resources

PART ONE OVERVIEW

Chapter 1 Introduction

1.1 Organization and Architecture

1.2 Structure and Function

1.3 Key Terms and Review Questions

Chapter 2 Computer Evolution and Performance

2.1 A Brief History of Computers

2.2 Designing for Performance

2.3 The Evolution of the Intel x86 Architecture

2.4 Embedded Systems and the ARM

2.5 Performance Assessment

2.6 Recommended Reading and Web Sites

2.7 Key Terms, Review Questions, and Problems

PART TWO THE COMPUTER SYSTEM

Chapter 3 A Top-Level View of Computer Function and Interconnection

3.1 Computer Components

3.2 Computer Function

3.3 Interconnection Structures

3.4 Bus Interconnection

3.5 PCI

3.6 Recommended Reading and Web Sites

3.7 Key Terms, Review Questions, and Problems

Appendix 3A Timing Diagrams

Chapter 4 Cache Memory

4.1 Computer Memory System Overview

4.2 Cache Memory Principles

4.3 Elements of Cache Design

4.4 Pentium 4 Cache Organization

4.5 ARM Cache Organization

4.6 Recommended Reading

4.7 Key Terms, Review Questions, and Problems

Chapter 5 Internal Memory Technology

5.1 Semiconductor Main Memory

5.2 Error Correction

5.3 Advanced DRAM Organization

5.4 Recommended Reading and Web Sites

5.5 Key Terms, Review Questions, and Problems

Chapter 6 External Memory

6.1 Magnetic Disk

6.2 RAID

6.3 Optical Memory

6.4 Magnetic Tape

6.5 Recommended Reading and Web Sites

6.6 Key Terms, Review Questions, and Problems

Chapter 7 Input/Output

7.1 External Devices

7.2 I/O Modules

7.3 Programmed I/O

7.4 Interrupt-Driven I/O

7.5 Direct Memory Access

7.6 I/O Channels and Processors

7.7 The External Interface: FireWire and Infiniband

7.8 Recommended Reading and Web Sites

7.9 Key Terms, Review Questions, and Problems

Chapter 8 Operating System Support

8.1 Operating System Overview

8.2 Scheduling

8.3 Memory Management

8.4 Pentium Memory Management

8.5 ARM Memory Management

8.6 Recommended Reading and Web Sites

8.7 Key Terms, Review Questions, and Problems

PART THREE THE CENTRAL PROCESSING UNIT

Chapter 9 Computer Arithmetic

9.1 The Arithmetic and Logic Unit (ALU)

9.2 Integer Representation

9.3 Integer Arithmetic

9.4 Floating-Point Representation

9.5 Floating-Point Arithmetic

9.6 Recommended Reading and Web Sites

9.7 Key Terms, Review Questions, and Problems

Chapter 10 Instruction Sets: Characteristics and Functions

10.1 Machine Instruction Characteristics

10.2 Types of Operands

10.3 Intel x86 and ARM Data Types

10.4 Types of Operations

10.5 Intel x86 and ARM Operation Types

10.6 Recommended Reading

10.7 Key Terms, Review Questions, and Problems

Chapter 11 Instruction Sets: Addressing Modes and Formats

11.1 Addressing

11.2 x86 and ARM Addressing Modes

11.3 Instruction Formats

11.4 x86 and ARM Instruction Formats

11.5 Assembly Language

11.6 Recommended Reading

11.7 Key Terms, Review Questions, and Problems

Chapter 12 Processor Structure and Function

12.1 Processor Organization

12.2 Register Organization

12.3 The Instruction Cycle

12.4 Instruction Pipelining

12.5 The x86 Processor Family

12.6 The ARM Processor

12.7 Recommended Reading

12.8 Key Terms, Review Questions, and Problems

Chapter 13 Reduced Instruction Set Computers (RISCs)

13.1 Instruction Execution Characteristics

13.2 The Use of a Large Register File

13.3 Compiler-Based Register Optimization

13.4 Reduced Instruction Set Architecture

13.5 RISC Pipelining

13.6 MIPS R4000

13.7 SPARC

13.8 The RISC versus CISC Controversy

13.9 Recommended Reading

13.10 Key Terms, Review Questions, and Problems

Chapter 14 Instruction-Level Parallelism and Superscalar Processors

14.1 Overview

14.2 Design Issues

14.3 Pentium 4

14.4 ARM Cortex-A8

14.5 Recommended Reading

14.6 Key Terms, Review Questions, and Problems

PART FOUR THE CONTROL UNIT

Chapter 15 Control Unit Operation

15.1 Micro-operations

15.2 Control of the Processor

15.3 Hardwired Implementation

15.4 Recommended Reading

15.5 Key Terms, Review Questions, and Problems

Chapter 16 Microprogrammed Control

16.1 Basic Concepts

16.2 Microinstruction Sequencing

16.3 Microinstruction Execution

16.4 TI 8800

16.5 Recommended Reading

16.6 Key Terms, Review Questions, and Problems

PART FIVE PARALLEL ORGANIZATION

Chapter 17 Parallel Processing

17.1 The Use of Multiple Processors

17.2 Symmetric Multiprocessors

17.3 Cache Coherence and the MESI Protocol

17.4 Multithreading and Chip Multiprocessors

17.5 Clusters

17.6 Nonuniform Memory Access Computers

17.7 Vector Computation

17.8 Recommended Reading and Web Sites

17.9 Key Terms, Review Questions, and Problems

Chapter 18 Multicore Computers

18.1 Hardware Performance Issues

18.2 Software Performance Issues

18.3 Multicore Organization

18.4 Intel x86 Multicore Organization

18.5 ARM11 MPCore

18.6 Recommended Reading and Web Sites

18.7 Key Terms, Review Questions, and Problems