In recent works of fiction such as the movies “Avatar” and “Surrogates”, a system allows a user, using only his/her thoughts, to experience and interact with the world through a physical copy (e.g. a robot) as if the experience were his/her own. Although state-of-the-art technologies do not yet make this possible, recent technological advances lay the first bricks of such a system. Building a “surrogate” system would require combining these technologies efficiently.

Traditionally, however, making a humanoid robot or an avatar perform a task at the will of the operator is done by capturing his/her movements. In this thesis, we wish to move away from gestures and movements as the means of controlling a task, toward the interpretation of the user’s thoughts. At first, we aim only at simple control tasks that closely follow telepresence schemes between an operator and a humanoid robot. The demonstrators we build and present in this thesis progressively move beyond this simple task to allow complex interactions between the user and his/her environment through the embodied device.

This work is conducted in the framework of the European integrated project VERE (Virtual Embodiment and Robotics re-Embodiment). Our goal in this project is to devise a software architecture that integrates a set of control and sensory feedback strategies to physically re-embody a human user into a humanoid robot surrogate or a virtual avatar using his/her own thoughts. The demonstrator we aim to achieve can be described as follows: a user is equipped with a brain-computer interface (BCI) that monitors brain-wave activity, from which we want to extract intentions and translate them into robotic commands. Sensory feedback should be delivered to the user so that s/he can embody the controlled device, whether virtual or real.

The feedback devices that compose the embodiment station were built within the VERE project by another partner (University of Pisa). The intentions of the operator are inferred from his/her thoughts and then transformed into robotic tasks. The humanoid robot executes these tasks, and its sensor outputs are interpreted and reproduced for the operator in a closed-loop fashion. The research challenges involved in the realization of such a system are numerous; we now detail them.

1. Complexity in fully controlling the surrogate’s motion

With the technologies currently available, we are not able to extract a precise (motor) intention from the user’s brain activity. Hence, our approach requires a substantial level of shared autonomy, that is, the capacity of the robot to make decisions on its own while respecting the wishes of the user. It also requires the robot to understand its environment. This latter requirement involves many things: the robot should know (or learn) how its environment is structured, where it is located within this environment and which tasks it can perform. This is necessary for two equally important reasons. First, the robot needs to understand its environment in order to interact with it safely and efficiently. Second, it has to be able to communicate its knowledge to the BCI, so that this knowledge can be used to better understand the user’s intentions and to provide meaningful feedback. In the case of a virtual avatar, this is easier to achieve, as the environment is simulated.
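The second reason above (the robot sharing its knowledge with the BCI) can be illustrated with a minimal sketch, assuming the BCI classifier outputs one probability per candidate intention and the robot exposes the set of tasks it can currently perform. Both interfaces and all task labels are hypothetical. The sketch discards intentions the robot cannot act on and renormalizes the rest, which is only one simple way of letting environment knowledge shape intention decoding.

```python
from typing import Dict, Set


def restrict_to_feasible(bci_probs: Dict[str, float],
                         feasible_tasks: Set[str]) -> Dict[str, float]:
    """Combine BCI output with the robot's knowledge of what it can do.

    bci_probs: classifier output, one probability per candidate intention
               (hypothetical labels, for illustration only).
    feasible_tasks: tasks the robot reports as currently feasible given its
                    model of the environment (hypothetical interface).
    Returns a renormalized distribution in which infeasible intentions get 0.
    If no feasible intention has any support, every entry is 0 and the
    caller should issue no command.
    """
    masked = {task: (p if task in feasible_tasks else 0.0)
              for task, p in bci_probs.items()}
    total = sum(masked.values())
    if total == 0.0:
        return masked
    return {task: p / total for task, p in masked.items()}


if __name__ == "__main__":
    probs = {"grasp_bottle": 0.40, "open_door": 0.35, "walk_forward": 0.25}
    feasible = {"grasp_bottle", "walk_forward"}  # e.g. no door in view
    print(restrict_to_feasible(probs, feasible))
```

Here the robot’s report that no door is reachable removes “open_door” from consideration, so the remaining probability mass is shared between the two feasible tasks.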

2. Minimal motion-based control/coupling

The technologies currently available to recover the user’s intentions from his/her brain activity are far from achieving the kind of understanding depicted in fictional works. They are in fact very limited with regard to the number of recognizable intentions, the available bit rate and the recognition accuracy. Therefore, we have to devise a number of strategies to obtain the user’s intentions through the B-BCI (Body-Brain Computer Interface). Furthermore, these intentions have to be mapped onto surrogate (robotic) actions.
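The three limits listed above (number of recognizable intentions, bit rate, accuracy) are often summarized in a single figure, the information transfer rate (ITR). The sketch below uses the widely used Wolpaw definition, in bits per selection, for N classes recognized with accuracy P: B = log2(N) + P log2(P) + (1 - P) log2((1 - P) / (N - 1)). The function names are illustrative, and clamping the result to zero at or below chance level is a common convention rather than part of the formula itself.

```python
import math


def bits_per_selection(n_classes: int, accuracy: float) -> float:
    """Wolpaw information transfer rate, in bits per selection."""
    if n_classes < 2:
        raise ValueError("need at least two classes")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    n, p = n_classes, accuracy
    if p <= 1.0 / n:
        return 0.0  # at or below chance level: no information transferred
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:  # the error term vanishes for perfect accuracy
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def bits_per_minute(n_classes: int, accuracy: float,
                    seconds_per_selection: float) -> float:
    """Scale bits per selection by the number of selections per minute."""
    return bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


if __name__ == "__main__":
    print(bits_per_selection(4, 0.8))     # about 0.96 bits
    print(bits_per_minute(4, 0.8, 4.0))   # about 14.4 bits per minute
```

For instance, four intentions recognized with 80 % accuracy carry about 0.96 bits per selection, i.e. roughly 14.4 bits per minute at one selection every 4 s, which shows how little information each decoded intention conveys.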

3. Full synergy and beyond telepresence transparency

The notion of embodiment, central within the VERE project, goes beyond the classical approaches of teleoperation and telepresence. In teleoperation and telepresence, a user controls a remote device and receives feedback (e.g. visual feedback or haptic cues) about the task s/he is executing through the device. In telepresence, this allows the user to feel present at the scene where s/he is executing a task through the device. In embodiment, the user should not only feel present at the remote location but s/he should feel as if the device’s body was his/her own. This requires the introduction of new sensory feedback strategies.

These issues all depend on constantly evolving scientific fields, which also drive our overall progress. They span a large number of research fields and go beyond the scope of a single thesis. Nevertheless, we demonstrate significant progress on each of them.

Brain-computer interfaces (BCI), or brain-machine interfaces (BMI), bypass the usual communication channels between a human and a computer or machine, such as hand or voice input devices (e.g. keyboards, mice, joysticks, voice-command microphones). Instead, they allow users to communicate their intentions to the computer by processing the source of these intentions: brain activity. In return, the user is able to control the various applications (software or devices) connected to the BCI. By combining this technology with a humanoid robot, we envision the possibility of incarnating one’s body into a robotic avatar. This is the goal pursued by the VERE project, within whose framework the present work takes place. In many ways, the problem at hand is reminiscent of telepresence technology. However, the specificities brought by the use of a brain-computer interface go beyond the aims and objectives of telepresence, while at the same time substantially degrading performance. Indeed, in the current state of technology, brain-computer interfaces differ from traditional control interfaces [3], as they have a low information rate, high input lag and erroneous inputs. While the two terms, BCI and BMI, have the same meaning, in this thesis we use the former when referring to the general concept and the latter when referring to a BCI in the context of robot control.
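Erroneous inputs are commonly mitigated by accumulating evidence over several consecutive classifier outputs before issuing a command, at the price of even more input lag. The following is a minimal sketch of one such scheme, a sliding-window majority vote; the class name, window size and vote threshold are hypothetical choices for illustration, not the method used in this thesis.

```python
from collections import Counter, deque
from typing import Deque, Optional


class MajorityVoteFilter:
    """Emit a command only when one label wins at least `min_votes`
    of the last `window` classifier outputs (hypothetical parameters)."""

    def __init__(self, window: int = 5, min_votes: int = 4) -> None:
        if not 1 <= min_votes <= window:
            raise ValueError("need 1 <= min_votes <= window")
        self.min_votes = min_votes
        self.history: Deque[str] = deque(maxlen=window)

    def update(self, label: str) -> Optional[str]:
        """Feed one classifier output; return a command or None."""
        self.history.append(label)
        best, votes = Counter(self.history).most_common(1)[0]
        if votes >= self.min_votes:
            self.history.clear()  # start fresh so the command is not re-emitted at once
            return best
        return None


if __name__ == "__main__":
    f = MajorityVoteFilter(window=5, min_votes=4)
    for out in ["left", "left", "right", "left", "left"]:
        print(f.update(out))  # None four times, then "left"
```

The single spurious "right" output is absorbed, but the command is only issued after the fifth output: raising `min_votes` trades fewer erroneous commands for longer lag.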

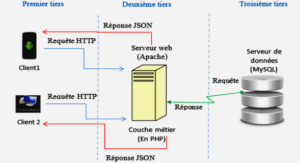

In recent years, several frameworks such as OpenViBE [4], BCI2000 [5] or TOBI hBCI [6] have introduced a similar three-layer model to build BCI applications. The signal acquisition layer monitors the physiological signals from the brain through one or several physical devices and digitizes these signals to pass them to the signal-processing unit. The signal-processing unit is in charge of extracting features (e.g. power spectrum, signal energy) from the raw signals and passing them to a classification algorithm that distinguishes the user’s intention patterns. Finally, these decoded intentions are passed to the user application. In this chapter, we present each layer independently. We first present the different acquisition technologies that have been used in the BCI context, discuss their benefits and limitations and justify the choice of electroencephalography (EEG) in most of our work. The second section focuses on the different paradigms that can be used in EEG-based BCI. Finally, we review previous works that have used BCI as a control input, focusing on the robotics domain.
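The three layers can be sketched as a chain of functions. This is a minimal illustration under stated assumptions, not the API of OpenViBE, BCI2000 or TOBI hBCI: acquisition is simulated with synthetic signals, the feature is the signal energy mentioned above, and the classifier is a hypothetical toy rule standing in for a trained algorithm. All function names are hypothetical.

```python
import math
import random
from typing import Callable, Dict, List, Optional, Sequence

Epoch = List[List[float]]  # one list of samples per channel


def acquire(n_channels: int = 2, n_samples: int = 256, fs: float = 256.0,
            active_channel: int = 0, seed: Optional[int] = None) -> Epoch:
    """Acquisition layer (simulated). A real system would read digitized
    samples from an amplifier; here a 10 Hz oscillation with a larger
    amplitude on `active_channel`, plus Gaussian noise, stands in for it."""
    rng = random.Random(seed)
    epoch: Epoch = []
    for ch in range(n_channels):
        amplitude = 2.0 if ch == active_channel else 0.5
        epoch.append([amplitude * math.sin(2.0 * math.pi * 10.0 * t / fs)
                      + rng.gauss(0.0, 0.1) for t in range(n_samples)])
    return epoch


def extract_features(epoch: Epoch) -> List[float]:
    """Signal-processing layer: signal energy (mean squared amplitude) per channel."""
    return [sum(x * x for x in samples) / len(samples) for samples in epoch]


def classify(features: Sequence[float], labels: Sequence[str]) -> str:
    """Classification (toy rule): the label of the highest-energy channel wins."""
    best = max(range(len(features)), key=lambda i: features[i])
    return labels[best]


def run_application(intention: str,
                    commands: Dict[str, Callable[[], str]]) -> str:
    """User application layer: turn the decoded intention into an action."""
    return commands[intention]()


if __name__ == "__main__":
    labels = ["left", "right"]
    commands = {"left": lambda: "turn left", "right": lambda: "turn right"}
    epoch = acquire(active_channel=1, seed=0)
    print(run_application(classify(extract_features(epoch), labels), commands))
```

Keeping each layer behind its own function mirrors the layered model: the simulated source could be replaced by a real amplifier, or the toy rule by a trained classifier, without touching the other layers.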

One’s brain activity can be “read” by means of different technologies. However, in the context of brain-computer interfaces, only those that measure brain activity in real time or close to real time are considered. In this section, we present some invasive solutions that have been used in BCI, and then focus on the three non-invasive technologies most used in BCI: functional near-infrared spectroscopy (fNIRS), functional magnetic resonance imaging (fMRI) and electroencephalography (EEG). The practical merits of these technologies, e.g. the quality of the recorded signals or their spatial localization accuracy (how precisely one can tell where in the brain a signal originates), are discussed together with other practical issues such as cost and, more importantly, the level of intrusiveness. Finally, we explain our choice to settle on EEG for most of the applications developed within this work.
