In this chapter, we explore probabilistic parametric learning approaches. A model with a finite number of parameters is used to predict an output y ∈ Y given an input x ∈ X. Probabilistic machine learning methods rely on probability theory for both training and inference, and the uncertainty of a prediction can be measured in terms of probability.

We investigate generative and discriminative approaches, which are usually contrasted with each other in the context of probabilistic learning for classification tasks. The former estimates the joint distribution and applies Bayes' rule, while the latter estimates the conditional distribution and performs inference directly. Both joint and conditional distributions can be associated with a graphical structure, which makes it possible to visualize dependencies and to represent the distributions as a product of local functions.
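The two routes to a prediction can be written out side by side. The block below uses the chapter's notation. The sum over y' in the denominator assumes a finite label set Y, as in classification.

```latex
\begin{aligned}
\text{generative:}\quad & p(y \mid x) = \frac{p(x \mid y)\,p(y)}{\sum_{y' \in \mathcal{Y}} p(x \mid y')\,p(y')},
  \qquad \hat{y} = \arg\max_{y \in \mathcal{Y}} p(x \mid y)\,p(y),\\
\text{discriminative:}\quad & p(y \mid x) \text{ parameterized and estimated directly},
  \qquad \hat{y} = \arg\max_{y \in \mathcal{Y}} p(y \mid x).
\end{aligned}
```

In the generative case the denominator does not depend on y. Maximizing the joint p(x|y)p(y) therefore gives the same decision as maximizing the posterior.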

In this chapter, we consider and compare supervised generative and discriminative learning methods such as naive Bayes and logistic regression. We also study approaches that take more elaborate sequential dependencies into account, namely hidden Markov models and maximum entropy Markov models.

Discriminative and Generative Learning

In many applications of machine learning, the goal is to assign a label y ∈ Y to an observation x ∈ X. The estimation is performed by a parameterized decision function. The parameters are learnt from a set of training inputs X = {x1, . . . , xN} and a corresponding set of labels Y = {y1, . . . , yN}. Generative and discriminative learning approaches are both widely used today and are usually contrasted with each other; Jebara (2004), for instance, even presents them as two different schools of thought.

At first glance, what could be better than building a complete model of the data, i.e., a generative model? Vapnik (1998) gave the most intuitive explanation of why generative classifiers can be unnecessarily complex and can be outperformed by simpler models: “one should solve the problem directly and never solve a more general problem as an intermediate step”. A generative model provides a generator of the data. If the goal is classification, however, modeling the data generator is an intermediate and often harder problem.

Generative models are known to yield the correct posterior if the training data are indeed drawn from the distribution the model assumes. In real-world applications, however, the true distribution is unknown. There is therefore no need to construct the full underlying distribution when p(y|x), the distribution we actually need, can be modeled directly.

Discriminative models

The discriminative approach avoids modeling the underlying distribution and aims at mapping observations directly to labels: discriminative models design p(y|x) directly. Efficient probabilistic discriminative models include logistic regression and its generalizations, such as maximum entropy Markov models and conditional random fields. The discriminative learning paradigm involves the marginal probability of neither the labels nor the observations. Discriminative models, in turn, have some disadvantages. As noted by Tu (2007), the discriminative approach focuses on constructing classification boundaries. Constructing them requires both positive and negative examples, and negative examples are not always available.

Given a training data set, a parametric family of probability models can be fit in two ways. Fitting it to the joint likelihood p(y, x) results in a generative classifier; fitting it to the conditional likelihood p(y|x) results in a conditional (discriminative) classifier. Ng and Jordan (2002) call such generative and conditional classifiers generative-discriminative pairs. To provide deeper insight into generative and discriminative learning, let us consider two such pairs: logistic regression and naive Bayes on the one hand, and hidden Markov models and maximum entropy Markov models on the other.
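For binary features the pairing between naive Bayes and logistic regression can be made explicit. The derivation below makes several assumptions: x ∈ {0,1}^d, two classes y ∈ {0, 1}, class priors π_y = p(y), and naive Bayes parameters φ_{jy} = p(x_j = 1 | y). The symbols π, φ, w and b are introduced here for illustration. Under these assumptions, the naive Bayes posterior takes exactly the logistic form, with weights fixed by the generative parameters.

```latex
\begin{aligned}
\log\frac{p(y=1 \mid x)}{p(y=0 \mid x)}
  &= \log\frac{\pi_1}{\pi_0}
   + \sum_{j=1}^{d}\Big[x_j \log\frac{\phi_{j1}}{\phi_{j0}}
   + (1-x_j)\log\frac{1-\phi_{j1}}{1-\phi_{j0}}\Big]
   = b + w^\top x,\\
w_j &= \log\frac{\phi_{j1}\,(1-\phi_{j0})}{\phi_{j0}\,(1-\phi_{j1})},
\qquad
b = \log\frac{\pi_1}{\pi_0} + \sum_{j=1}^{d}\log\frac{1-\phi_{j1}}{1-\phi_{j0}},\\
p(y=1 \mid x) &= \frac{1}{1 + \exp\!\big(-(b + w^\top x)\big)}.
\end{aligned}
```

The two members of the pair therefore share the same functional form for p(y|x). They differ in how the parameters are estimated. Naive Bayes fits π and φ by maximizing the joint likelihood, whereas logistic regression fits w and b by maximizing the conditional likelihood.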

Logistic Regression and Naive Bayes for Classification Tasks

In this section, we investigate a generative-discriminative pair of methods widely used for supervised classification: logistic regression and naive Bayes. We consider the distributions modeled by each of them. We will see that logistic regression directly models the conditional probability p(y|x). Naive Bayes instead estimates p(x|y) and p(y), which can then be combined to predict a class given an observation. We describe the maximum likelihood approaches used to estimate the parameters of these models, and we discuss whether logistic regression and naive Bayes are appropriate for all kinds of applications.
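Naive Bayes obtains p(y|x) indirectly, by combining estimates of p(y) and p(x|y) through Bayes' rule. The sketch below illustrates this under several stated assumptions:

- Features are binary, giving a Bernoulli naive Bayes model. This is only one of several possible choices for p(x_j|y).
- Parameters are estimated by counting, which is the maximum likelihood solution.
- The pseudo-count `alpha` (Laplace smoothing) is an added assumption that avoids log(0) for feature values unseen in a class. Setting `alpha=0` recovers the plain maximum likelihood estimates.
- The function names are hypothetical.
- Classes are indexed 0..K-1.

```python
import numpy as np

def fit_bernoulli_nb(X, y, n_classes, alpha=1.0):
    """Estimate log p(y) and p(x_j = 1 | y) from counts.

    X: (N, d) array of 0/1 features; y: (N,) integer labels in {0, ..., K-1}.
    alpha: hypothetical Laplace pseudo-count; alpha=0 gives the plain MLE
    (which may produce log(0) later if some estimate is exactly 0 or 1).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    N, d = X.shape
    log_prior = np.empty(n_classes)
    phi = np.empty((n_classes, d))  # phi[k, j] = p(x_j = 1 | y = k)
    for k in range(n_classes):
        Xk = X[y == k]
        n_k = Xk.shape[0]
        log_prior[k] = np.log(n_k / N)                        # MLE of p(y = k)
        phi[k] = (Xk.sum(axis=0) + alpha) / (n_k + 2 * alpha)  # smoothed MLE of p(x_j=1|y=k)
    return log_prior, phi

def predict_proba_nb(X, log_prior, phi):
    """Posterior p(y | x) via Bayes' rule, computed in log space for stability."""
    X = np.asarray(X, dtype=float)
    # log p(x | y=k) = sum_j [x_j log phi_kj + (1 - x_j) log(1 - phi_kj)]  (naive Bayes)
    log_lik = X @ np.log(phi).T + (1 - X) @ np.log(1 - phi).T  # shape (N, K)
    log_joint = log_lik + log_prior                            # log p(x, y=k)
    log_joint -= log_joint.max(axis=1, keepdims=True)          # shift to avoid overflow
    joint = np.exp(log_joint)
    return joint / joint.sum(axis=1, keepdims=True)            # normalize over classes

# Usage on a toy data set with three binary features.
X_train = np.array([[1, 1, 0], [1, 0, 0], [0, 1, 1], [0, 0, 1]])
y_train = np.array([0, 0, 1, 1])
log_prior, phi = fit_bernoulli_nb(X_train, y_train, n_classes=2)
print(predict_proba_nb(np.array([[1, 0, 0]]), log_prior, phi))  # approximately [[0.9, 0.1]]
```

The denominator of Bayes' rule is never formed explicitly. Normalizing the joint over classes at the end computes the same quantity.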

Logistic Regression

The logistic regression model aims to predict the posterior probability of a class $y \in \{1, \dots, K\}$ via linear functions of an observation $x \in \mathbb{R}^d$. The model is based on the assumption that the conditional probability of class $k$ given an observation is proportional to $\exp(f_k(x))$, with $f_k(x) = \theta_k^\top x$. Here $k \in \{1, \dots, K\}$ indexes the classes, and $\theta_k$ is the vector of parameters associated with class $k$.
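Normalizing exp(θ_k^T x) over the K classes gives the posterior p(y = k | x) = exp(θ_k^T x) / Σ_j exp(θ_j^T x), i.e. a softmax of the linear scores. The sketch below computes it with NumPy and fits Θ by plain gradient ascent on the conditional log-likelihood. This is one simple way to carry out maximum likelihood estimation; the chapter does not prescribe an optimizer. Several further choices are assumptions:

- The function names, learning rate and iteration count are hypothetical.
- A constant feature equal to 1 is assumed to be appended to x when an intercept is wanted.
- Classes are indexed 0..K-1 in the code rather than 1..K.

```python
import numpy as np

def softmax_posterior(Theta, X):
    """p(y = k | x) = exp(theta_k^T x) / sum_j exp(theta_j^T x).

    Theta: (K, d) matrix whose k-th row is theta_k; X: (N, d) observations.
    """
    scores = X @ Theta.T                         # f_k(x) = theta_k^T x, shape (N, K)
    scores -= scores.max(axis=1, keepdims=True)  # shifting all scores leaves the softmax unchanged
    e = np.exp(scores)
    return e / e.sum(axis=1, keepdims=True)

def fit_logreg(X, y, n_classes, lr=0.1, n_iter=500):
    """Maximize the mean conditional log-likelihood (1/N) sum_i log p(y_i | x_i) by gradient ascent."""
    X = np.asarray(X, dtype=float)
    N, d = X.shape
    Theta = np.zeros((n_classes, d))
    Y = np.eye(n_classes)[np.asarray(y)]         # one-hot labels, shape (N, K)
    for _ in range(n_iter):
        P = softmax_posterior(Theta, X)
        grad = (Y - P).T @ X / N                 # gradient w.r.t. Theta, shape (K, d)
        Theta += lr * grad
    return Theta

# Usage: the first column of ones plays the role of an intercept (an assumption).
X_train = np.array([[1., -2.], [1., -1.], [1., 1.], [1., 2.]])
y_train = np.array([0, 0, 1, 1])
Theta = fit_logreg(X_train, y_train, n_classes=2)
print(softmax_posterior(Theta, X_train).argmax(axis=1))  # predicted classes
```

Adding the same vector to every θ_k leaves the posterior unchanged, so the parameters are not unique. Starting from zero, as here, is one common convention. A regularizer or a fixed reference class would be needed to make them identifiable.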

Graphical Models

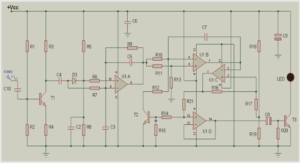

Graphical models are a natural formalism for describing the structure and dependencies of a probabilistic model, since statistical models can be formulated in terms of graphs. Research on graphical models is very active; see, e.g., (Lauritzen, 1996; Jordan et al., 1999; Jordan, 1999; Wainwright and Jordan, 2003). To provide some intuition, let us consider logistic regression and naive Bayes as graphical models. Both can be represented graphically, as in Figure 2.1, where x = [x1, x2, x3] is a vector of observations and y is its corresponding label. Following standard notation, each node is a variable; shaded nodes are observed, and transparent ones are hidden.
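For the three-observation example of Figure 2.1, the distribution attached to each graph can be written as a product of local functions. The block below makes two assumptions: the usual naive Bayes conditional-independence assumption, and the linear scores f_k(x) = θ_k^T x of logistic regression; θ_{k,i} denotes the i-th component of θ_k. Z(x) is notation introduced here for the normalizer.

```latex
\begin{aligned}
\text{naive Bayes (joint):}\quad
  & p(y, x_1, x_2, x_3) = p(y)\,p(x_1 \mid y)\,p(x_2 \mid y)\,p(x_3 \mid y),\\
\text{logistic regression (conditional):}\quad
  & p(y = k \mid x_1, x_2, x_3) = \frac{1}{Z(x)} \prod_{i=1}^{3} \exp(\theta_{k,i}\, x_i),
  \qquad Z(x) = \sum_{j=1}^{K} \exp(\theta_j^\top x).
\end{aligned}
```

In the naive Bayes factorization every factor is itself a (conditional) probability. In logistic regression the factors exp(θ_{k,i} x_i) are nonnegative local functions that become a distribution over y only after division by Z(x), and no distribution over x is modeled at all.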

1 Introduction |