Advanced Analytics Platform

Also, how does the orchestration layer guide the conversation layer to make the conversation more relevant and insightful without slowing it down? This section continues the sports television analogy and uses it to derive characteristics of the analytics architecture and its supporting infrastructure.

The first task is to identify a situation or an entity. An analytics system working in conversation mode must use simple selection criteria, in the form of counters and filters, to rapidly reduce the search space and identify the situation or entity within a window of time. A television commentator is able to follow the ball, identify the players in the focus area, and differentiate between good and bad performances. Similarly, a real-time campaign system filters all the available data to identify a customer who meets certain campaign-dependent criteria. For example, a customer who has been conducting online searches for smartphones is a good candidate for a smartphone product offer. A number of real-time parameters, such as customer location and recent web searches, play major roles.

The second task is to assemble all associated facts. At this point, the sports television director may offer the commentator data previously collected about the players, which the commentator can combine with his or her personal experience to narrate a story. In the Big Data Analytics architecture, the moment a customer walks into a retail store or connects with a call center, the orchestrator uses identifying information to pull all the relevant information about this customer.

Figure 5.1: Mapping of sports television to Big Data Analytics architecture

54 • Big Data Analytics

The third task is to score and prioritize alternatives to establish the focus area. In U.S. football, it always fascinates me when half a dozen players wrestle with each other to stop the ball. The commentator has the tough task of watching the ball and the significant players while ignoring the rest.
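The first task, identifying a candidate with counters and filters inside a time window, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how InfoSphere Streams or any other product implements it; the `Event` and `WindowedCounter` names, the ten-minute window, and the three-search threshold are hypothetical choices made for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    customer_id: str
    kind: str         # hypothetical event type, e.g. "search"
    topic: str        # hypothetical subject, e.g. "smartphone"
    timestamp: float  # seconds


class WindowedCounter:
    """Counts matching events per customer over a sliding time window."""

    def __init__(self, window_seconds: float, threshold: int, kind: str, topic: str):
        self.window = window_seconds
        self.threshold = threshold
        self.kind = kind
        self.topic = topic
        self._times = defaultdict(deque)  # customer_id -> timestamps inside the window

    def observe(self, event: Event) -> bool:
        """Return True when the customer reaches the threshold inside the window."""
        # Filter: a cheap predicate discards irrelevant events immediately.
        if event.kind != self.kind or event.topic != self.topic:
            return False
        times = self._times[event.customer_id]
        times.append(event.timestamp)
        # Counter: evict timestamps that have fallen out of the window, then count.
        while times and event.timestamp - times[0] > self.window:
            times.popleft()
        return len(times) >= self.threshold


detector = WindowedCounter(window_seconds=600, threshold=3, kind="search", topic="smartphone")
stream = [
    Event("lisa", "search", "smartphone", 0),
    Event("lisa", "search", "camera", 30),
    Event("lisa", "search", "smartphone", 120),
    Event("bob", "search", "smartphone", 130),
    Event("lisa", "search", "smartphone", 300),
]
candidates = [e.customer_id for e in stream if detector.observe(e)]
print(candidates)  # ['lisa']
```

Each event costs only a comparison and a few deque operations, which is the point of using counters and filters before any expensive model runs.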
Similarly, in the Big Data Analytics architecture, we may be dealing with hundreds of predictive models. In a very short time (less than one second in most cases), the analysis system must score these models against the available data, identify the most important alternative, and pass it on for further action. In online advertising, the bidding process may conclude in less than 100 milliseconds: the Demand Side Platform (DSP) must evaluate a number of competing advertisement candidates and select the one that the customer is most likely to click.

The last task is to package all the real-time evidence. The information is turned over to the orchestration layer for storage and future discovery. The conversation layer can now focus on the next task, while the orchestration layer annotates the data and sends it to the discovery layer.

A number of emerging software products provide technical capabilities for real-time identification, data synthesis, and scoring, commonly referred to as stream computing. Stream computing is a new paradigm. In “traditional” processing, one runs analytic queries against historical data: for instance, calculating the distance walked last month from a data set of subscribers who transmit GPS location data while walking. With stream computing, one can identify, count, filter, and associate events from a number of unrelated streams as they arrive, and score alternatives against previously specified predictive models. IBM’s InfoSphere Streams has been successfully applied to the conversation layer for low-latency, real-time analytics.
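The scoring step can be sketched as a loop that scores each advertisement candidate against its own model and keeps the best one found before the bid deadline. The per-ad logistic models, their weights, the feature names, and the `select_ad` helper are all hypothetical; a real DSP would load trained models and would typically score candidates in parallel.

```python
import math
import time
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class AdCandidate:
    ad_id: str
    weights: Dict[str, float]  # hypothetical per-ad logistic model coefficients
    bias: float


def click_probability(candidate: AdCandidate, features: Dict[str, float]) -> float:
    """Logistic score: sigmoid(bias + sum of weight * feature value)."""
    z = candidate.bias + sum(
        w * features.get(name, 0.0) for name, w in candidate.weights.items()
    )
    return 1.0 / (1.0 + math.exp(-z))


def select_ad(
    candidates: List[AdCandidate], features: Dict[str, float], budget_ms: float = 100.0
) -> Optional[Tuple[str, float]]:
    """Score candidates until the time budget runs out; return the best (ad_id, probability)."""
    deadline = time.perf_counter() + budget_ms / 1000.0
    best: Optional[Tuple[str, float]] = None
    for candidate in candidates:
        if time.perf_counter() > deadline:
            break  # bid window is closing: answer with the best alternative so far
        p = click_probability(candidate, features)
        if best is None or p > best[1]:
            best = (candidate.ad_id, p)
    return best


customer = {"searched_smartphones": 1.0, "near_store": 1.0}
ads = [
    AdCandidate("tablet_promo", {"searched_smartphones": 0.2}, -2.0),
    AdCandidate("smartphone_offer", {"searched_smartphones": 1.5, "near_store": 0.5}, -1.0),
    AdCandidate("camera_deal", {"near_store": 0.3}, -1.5),
]
winner = select_ad(ads, customer)
print(winner)
```

Stopping at the deadline and answering with the best alternative found so far reflects the trade-off described above: in a 100-millisecond auction, a good answer on time is worth more than a perfect answer too late.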

Orchestration and Synthesis Using Analytics Engines

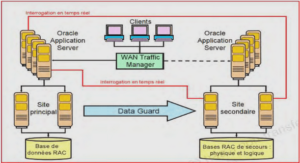

Nowadays, it is impossible to imagine a live television program without orchestration. A highly productive team has replaced the lone “sportscaster” of the early days of sports coverage. A typical television production involves a number of cameras offering a variety of angles on the players, in addition to stock footage, commentators, commercial breaks, and more. The director provides the orchestration, assisted by a team of people who organize the resources and facilitate the live event.

Chapter 5: Advanced Analytics Platform • 55

Similarly, Big Data Analytics increasingly involves a vast number of components and options, and the stakes keep rising. For example, as we discussed in Chapter 3, new product launches call for orchestrated campaigns, in which product information is disseminated through a variety of media and customer sentiment is carefully monitored and shaped to make the launch successful.

Let us use the Intelligent Advisor scenario that was described in Section 3.6 and depicted in Figure 3.3. We repeat the steps here so we can analyze the process that the Retailer and Telco use to engage the customer:

Step 1: Lisa registers with the Retailer and gives the Retailer and the Telco permission to use her consumer information to track her activities.

Step 2: Lisa follows a friend’s post on Facebook and clicks the Like button on a camera she likes.

Step 3: The Intelligent Advisor platform processes Lisa’s activity for relevant actions using Retailer and Telco information.

Step 4: Lisa receives a message with an offer reminding her to stop by if she is in the area.

Step 5: Lisa receives a promotion code for an offer while passing by the store.

Step 6: Lisa uses the promotion code to purchase the offer at a PoS device.

Figure 5.2: Telco/Retailer orchestration in Intelligent Advisor

Figure 5.3: Orchestration-driven identity resolution

Figure 5.2 shows the behind-the-scenes orchestration steps.
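The six Intelligent Advisor steps can be read as an event-driven flow in which Lisa's opt-in gates every later action. The sketch below is a hypothetical simplification: the `Customer` and `IntelligentAdvisor` classes, their method names, and the promotion-code format are invented for illustration and do not correspond to any Retailer, Telco, or IBM interface.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Customer:
    name: str
    opted_in: bool = False  # Step 1: permission for the Retailer and the Telco
    interests: List[str] = field(default_factory=list)


@dataclass
class IntelligentAdvisor:
    store_id: str
    messages: List[str] = field(default_factory=list)
    # hypothetical promotion code -> (customer name, product)
    issued_codes: Dict[str, Tuple[str, str]] = field(default_factory=dict)
    issued_count: int = 0  # monotonically increasing, so codes never repeat

    def on_like(self, customer: Customer, product: str) -> None:
        """Steps 2-4: a social 'Like' becomes a tracked interest and a reminder offer."""
        if not customer.opted_in:
            return  # no permission, no tracking
        customer.interests.append(product)
        self.messages.append(
            f"{customer.name}: {product} is on offer, stop by if you are in the area"
        )

    def on_location(self, customer: Customer, nearby_store: str) -> Optional[str]:
        """Step 5: a location event near the store triggers a promotion code."""
        if not customer.opted_in or nearby_store != self.store_id or not customer.interests:
            return None
        product = customer.interests[-1]
        self.issued_count += 1
        code = f"{self.store_id}-{customer.name}-{self.issued_count}".upper()
        self.issued_codes[code] = (customer.name, product)
        self.messages.append(f"{customer.name}: use code {code} for {product}")
        return code

    def redeem(self, code: str) -> Optional[str]:
        """Step 6: redemption at the PoS device; each code works only once."""
        entry = self.issued_codes.pop(code, None)
        return entry[1] if entry else None


lisa = Customer("lisa", opted_in=True)
advisor = IntelligentAdvisor(store_id="store42")
advisor.on_like(lisa, "camera")                      # Steps 2-4
code = advisor.on_location(lisa, "store42")          # Step 5
purchased = advisor.redeem(code) if code else None   # Step 6
print(code, purchased)  # STORE42-LISA-1 camera
```

Checking `opted_in` in every handler mirrors the point that each offer depends on the permission granted in Step 1.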
As the system interacts with Lisa, it uses an increasing amount of opt-in information to make specific offers to her. By the time we get to Step 5, we are dealing with a specific store location and related customer profile information stored in the Retailer’s customer database. These orchestrations involve a number of architectural components, possibly represented by a number of products, each performing a role. One set of components is busy matching customers to known data and discovering more data. Another set of components is performing deep reflection to find new patterns. A management information system keeps track of the overall Key Performance Indicators (KPIs) and data governance.

Figure 5.3 depicts the orchestration model. The observation space and its interactions operate in real time; IBM’s InfoSphere Streams provides capabilities suited to real-time pattern matching and interaction in this space. The deep reflection requires predictive modeling or unstructured data correlation capabilities and is best performed using SPSS or BigInsights. Directed attention may be provided using a set of conversation tools, such as Unica® or smartphone apps (e.g., Worklight™). Management reporting and dashboards may be provided using Cognos. Depending on the level of sophistication and latency required, several components can fill the box in the middle, which decides on the orchestration focus, directs the various components, and choreographs their participation for a specific cause, such as getting Lisa to buy something at the store.

Entity Resolution

Using a variety of data sources, IBM’s Entity Analytics® can resolve the identity of the customer. During entity resolution, we may use offers and promotion codes to encourage customer participation, both to resolve identity and to obtain permission to make offers (as in Steps 4 and 5 above).
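Entity resolution can be illustrated with a simple deterministic matcher: records from different sources are linked when they share a normalized email address or phone number, and a union-find structure merges those links into customer clusters. This is a sketch under stated assumptions, not the algorithm used by IBM’s Entity Analytics; the record fields, the source-prefixed ids, and the normalization rules are hypothetical.

```python
from typing import Dict, List, Set


def normalize_email(value: str) -> str:
    return value.strip().lower()


def normalize_phone(value: str) -> str:
    digits = "".join(ch for ch in value if ch.isdigit())
    return digits[-10:]  # assumption: compare on the last 10 digits


def resolve_entities(records: List[Dict[str, str]]) -> List[Set[str]]:
    """Group record ids that share a normalized email or phone, using union-find."""
    parent = {r["id"]: r["id"] for r in records}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    first_seen: Dict[str, str] = {}  # normalized key -> first record id carrying it
    for r in records:
        email = normalize_email(r.get("email", ""))
        phone = normalize_phone(r.get("phone", ""))
        keys = []
        if email:
            keys.append("email:" + email)
        if phone:
            keys.append("phone:" + phone)
        for key in keys:
            if key in first_seen:
                union(first_seen[key], r["id"])
            else:
                first_seen[key] = r["id"]

    groups: Dict[str, Set[str]] = {}
    for rid in parent:
        groups.setdefault(find(rid), set()).add(rid)
    return list(groups.values())


records = [
    {"id": "retail-1", "email": "Lisa@Example.com", "phone": ""},
    {"id": "telco-7", "email": "", "phone": "+1 (555) 010-2030"},
    {"id": "facebook-3", "email": "lisa@example.com ", "phone": "555-010-2030"},
    {"id": "retail-2", "email": "bob@example.com", "phone": ""},
]
clusters = resolve_entities(records)
print(clusters)
```

The Facebook record bridges the Retailer and Telco records, so all three collapse into one customer even though no two of the original sources share a key directly. This transitive linking is why the promotion codes and permissions described above help: each new interaction can add a bridging key.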
Model Management

IBM SPSS provides collaboration and deployment services that can track the performance of a set of models. Depending on the criteria, the models can be applied to different parts of the population and switched, for example, by using the champion/challenger approach.

Command Center

A product manager may set up a monitoring function to track the progress of a new product launch or promotional campaign. Monitoring may include product sales, competitive activities, and social media feedback. Velocity from Vivisimo, a recent IBM acquisition, provides federated access to a variety of source data associated with a product or customer. A dashboard gives the users who monitor the launch access to this progress information. Alternatively, the information can be packaged in an XML message and shipped to other organizations or automated agents.

Analytics Engine

An analytics engine may provide a mechanism for accumulating all the customer profiles, insights, and matches, as well as capabilities for analyzing this data using predictive modeling and reporting tools. Because this analytics engine becomes the central hub for all information flows, it must be able to handle high volumes of data. IBM’s Netezza product has been successfully used as an analytics engine in Big Data architectures.
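The champion/challenger approach mentioned under Model Management can be sketched as follows: a hash of the customer id deterministically routes a small share of the population to the challenger model, outcomes are tallied per model, and the roles are swapped once the challenger shows a higher success rate over enough trials. The class, the 10% share, and the 100-trial minimum are hypothetical, and this is not the SPSS Collaboration and Deployment Services API; a production setup would also apply a statistical significance test before switching.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ModelStats:
    trials: int = 0
    successes: int = 0

    @property
    def rate(self) -> float:
        return self.successes / self.trials if self.trials else 0.0


@dataclass
class ChampionChallenger:
    champion: str
    challenger: str
    challenger_share: float = 0.1  # hypothetical fraction routed to the challenger
    min_trials: int = 100          # hypothetical evidence required before a switch
    stats: Dict[str, ModelStats] = field(default_factory=dict)

    def route(self, customer_id: str) -> str:
        """Deterministically assign a customer to a model by hashing the id."""
        digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:6], "big") / 2**48  # uniform in [0, 1)
        return self.challenger if bucket < self.challenger_share else self.champion

    def record(self, model: str, success: bool) -> None:
        s = self.stats.setdefault(model, ModelStats())
        s.trials += 1
        s.successes += int(success)

    def maybe_promote(self) -> bool:
        """Swap roles when the challenger beats the champion on enough trials."""
        champ = self.stats.get(self.champion, ModelStats())
        chall = self.stats.get(self.challenger, ModelStats())
        if min(champ.trials, chall.trials) < self.min_trials or chall.rate <= champ.rate:
            return False
        self.champion, self.challenger = self.challenger, self.champion
        return True


cc = ChampionChallenger(champion="response_model_v1", challenger="response_model_v2")
for i in range(200):
    cc.record("response_model_v1", success=(i % 10 == 0))  # 10% response rate
    cc.record("response_model_v2", success=(i % 5 == 0))   # 20% response rate
promoted = cc.maybe_promote()
print(promoted, cc.champion)  # True response_model_v2
```

Hashing rather than random sampling keeps each customer on the same model across interactions, so the per-model tallies are not blurred by customers hopping between treatments.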