Local methods in image classification

Before the supremacy of deep neural networks, state-of-the-art image classification techniques relied on the separation of the image into patches [Perronnin et al., 2010, Wang et al., 2010]. An image $x$ with $H \times W$ pixels $x_{ij}$, $i < H$, $j < W$, is decomposed into patches $p$ with $Q^2$ pixels $p_{i,j}$, $i, j < Q$. This reduces the dimensionality of the problem from $3HW$ (typically $\sim 10^5$) to $3Q^2$ (typically $\sim 10^3$). One can further reduce the dimension to $\sim 10^2$ by computing Scale-Invariant Feature Transform (SIFT, Lowe [2004]) or Histograms of Oriented Gradients (HOG, Dalal and Triggs [2005]) descriptors $D(p)$ of these patches $p$. The descriptor $D(p)$ is then encoded into $\Phi(D(p))$, using, for example, a finite-dimensional approximation of the Fisher kernel [Perronnin et al., 2010]. Finally, Perronnin et al. [2010] and Wang et al. [2010] average the encoding $\Phi(D(p))$ over the image patches $p$ and apply a linear operator $W$ for the classification. The average over spatial indices and the linear operation over the dimension of the encoding $\Phi(D(p))$ can be interchanged. The classification decision $F(x)$ is hence a sum over the patches $p$ of the image $x$:

$$F(x) = \sum_{p \in x} W \Phi(D(p)).$$
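The interchange of averaging and linear classification can be checked numerically. The following is a minimal sketch, assuming NumPy, a toy random image, and a hypothetical `encode` function (a random projection followed by a ReLU) standing in for the encoding of patch descriptors; it is not SIFT, HOG, or a Fisher-vector encoding. The names `extract_patches`, `encode`, and `W_cls` are illustrative.

```python
import numpy as np

def extract_patches(image, Q, stride):
    """Return all Q x Q patches of an H x W x 3 image as rows of length 3*Q*Q."""
    H, W, _ = image.shape
    patches = [
        image[i:i + Q, j:j + Q, :].reshape(-1)
        for i in range(0, H - Q + 1, stride)
        for j in range(0, W - Q + 1, stride)
    ]
    return np.stack(patches)

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                    # toy image: 3HW = 3072 values
patches = extract_patches(image, Q=8, stride=4)    # each patch: 3Q^2 = 192 values

# Hypothetical stand-in for the patch encoding: random projection + ReLU.
A = rng.standard_normal((patches.shape[1], 64))

def encode(p):
    return np.maximum(p @ A, 0.0)

W_cls = rng.standard_normal((64, 10))              # linear classifier, 10 classes

# (a) average the encodings over patches, then apply the linear classifier
scores_avg_then_linear = encode(patches).mean(axis=0) @ W_cls
# (b) classify every patch, then average the per-patch decisions
scores_linear_then_avg = (encode(patches) @ W_cls).mean(axis=0)

print(np.allclose(scores_avg_then_linear, scores_linear_then_avg))
```

An average and a sum over patches differ only by the constant factor 1/(number of patches), which does not change the predicted class.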

Unlike in physics, where distances are absolute and the size of the neighborhood is limited to a few ångströms (Å), the size of a patch in an image is related to the resolution of the image. An 8 × 8 patch of a 32 × 32 CIFAR-10 image and an 8 × 8 patch of a 256 × 256 ImageNet image contain qualitatively different information. The size of the patch hence corresponds to a certain scale. Patch separation is implicitly a form of scale separation, with a scale corresponding to the ratio between the patch size and the image size.
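As a small worked example of this ratio, the sketch below computes the fraction of the image side and of the image area covered by an 8 × 8 patch for the two image sizes mentioned above. The helper name `patch_scale` is illustrative.

```python
def patch_scale(patch_size, image_size):
    """Ratio of patch side to image side, and fraction of the image area covered."""
    side_ratio = patch_size / image_size
    return side_ratio, side_ratio ** 2

cifar = patch_scale(8, 32)       # 8 x 8 patch in a 32 x 32 image
imagenet = patch_scale(8, 256)   # 8 x 8 patch in a 256 x 256 image

print(cifar)      # (0.25, 0.0625)
print(imagenet)   # (0.03125, 0.0009765625)
```

The same patch size covers about 6% of the area of the small image but less than 0.1% of the larger one, which is why it corresponds to a different scale in each case.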

Convolutional neural networks

Convolutional neural networks (CNNs) show impressive results on image classification [Krizhevsky et al., 2012, He et al., 2016] and on energy regression in physics [Schütt et al., 2018]. Unlike local methods, CNNs process the input as a whole. They separate neither the image into patches nor the molecule into atomic neighborhoods. They yield higher accuracy than separation-based methods in image classification and in energy regression in physics.

On the one hand, removing local separation increases the input dimension: the function is harder to approximate. On the other hand, enforcing a local separation of the input can discard information that is relevant for the classification or regression task. Classifying an image using the whole image instead of its patches allows analyzing global shapes that are not contained in patches. LeCun et al. [2015] propose the following explanation of the success of CNNs in image classification: « The first layer [of the convolutional network] represents the presence or absence of edges […] in the image. The second layer typically detects motifs […]. The third layer may assemble motifs into […] parts of familiar objects, and subsequent layers would detect objects as combinations of these parts. » A similar interpretation is given by Kriegeskorte [2015]: « the [deep convolutional] network acquires complex knowledge about the kinds of shapes associated with each category. […] High-level units appear to learn representations of shapes occurring in natural images. »

The energy of an atomic system results from complex many-body interactions. Moreover, methods based on short-range separation typically only account for interactions in the range of 0 to 5 Å. One can hypothesize that they cannot capture long-range interactions such as van der Waals interactions, which range up to 15 Å. The SchNet convolutional neural network introduced by Schütt et al. [2018] can theoretically model interactions up to a range of 30 Å.

Local methods’ efficiency for image classification and energy regression

In this dissertation, we study image classification and energy regression techniques relying on local separation. What are the benefits of using local separation for the interpretability of the predictions? Do local methods perform significantly worse than non-local methods on image classification and energy regression? If yes, how can we capture the non-local components of the function we are trying to approximate? If no, what does it tell us about the underlying regularity properties of the supervised learning problem?

Image classification and energy regression in physics are two high-dimensional supervised learning problems. These two problems share several similarities that make our study all the more interesting:

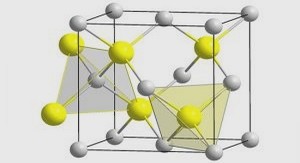

• Atomic neighborhoods in atomic systems can be seen as the equivalent of patches in images.

• Invariance properties have driven the design of descriptors of atomic neighborhoods and image patches: rotation and translation invariance for atomic neighborhoods; scale, lighting, and deformation invariance for image patch patterns.

• The dimension of the image patch and atomic neighborhood descriptors presented in the literature is $\sim 10^2$, which is significantly lower than the initial dimension but is still not a low dimension, i.e., a dimension ≤ 10.

• These two problems are multi-scale problems. Energy results from interactions at different scales, e.g., ionic and covalent bonds at short range, van der Waals interactions at the mesoscale, and long-range Coulomb interactions. One can classify an image using texture information at a small scale, pattern information at a larger scale, or shape information at the image scale.

These two problems also have notable differences that make the study complementary:

• One problem is about regression, the other is about classification.

• The atomic positions in physics are in the continuous 3D space. Natural images are sampled on a finite grid ranging from 32 × 32 to 1024 × 1024 pixels typically.

• In physics, distances are absolute: the distance between an atom and its nearest neighbor is on the order of 1 Å. In image classification, the number of pixels that separate two components (e.g., two edges) of an object in the image depends upon the sampling grid.

• In terms of performance, kernel methods are on par with CNNs in energy regression in physics. In image classification, CNNs are far above kernel methods.

Alongside the release of an open-source Python implementation of the presented techniques, the main contributions of this thesis are the following. In the field of energy regression in physics:

• The Solid Harmonic Scattering Transform [Eickenberg et al., 2017] is an atomic environment descriptor relying on scale separation. It is implemented as a multi-scale convolutional neural network involving a cascade of wavelet transforms. We compare this descriptor with existing local descriptors on two different energy regression benchmarks. One concerns the energy regression of small organic molecules. The other concerns long-range energy regression in solid graphite. Results show that local descriptors are very efficient at regressing energies in these two cases.

• We present a method to regress the vibrational entropy in atomic systems, a free energy component. We study comparatively the Solid Harmonic Scattering transform and a local descriptor called Angular Fourier Series (AFS) [Bartók et al., 2013]. Results show a significantly better predictive power of the local AFS descriptor. Moreover, the presented regression model trained on small systems can extrapolate to very large atomic systems.

In the field of image classification:

• We present a structured convolutional neural network architecture that classifies images using small patches. Its performance is comparable with that of BagNet [Brendel and Bethge, 2019], a state-of-the-art CNN that relies on patch separation.

• We demonstrate that one can classify images with a non-trivial accuracy using K-nearest-neighbors computations between raw image patches solely. The presented technique significantly outperforms existing non-learned visual representations such as Scattering Transform [Bruna and Mallat, 2013] on CIFAR-10 and ImageNet databases with a linear classifier.

We end this dissertation with an opening on a different topic. Algorithms developed for supervised learning techniques have been used recently in the field of artistic creation. For example, image classification algorithms can be used to perform artistic style transfer on images [Gatys et al., 2015]. Image generation algorithms were used to generate « artistic » images. Some of these generated images are sold on the art market and shown during a public exhibition in the Centre Pompidou in Paris. These phenomena raise new questions about the notions of creation and creativity. We study this notion of creativity from the human-machine interaction perspective. The contributions of this thesis regarding this topic are the following:

• We propose a new form of human-machine interaction consisting of interactive rounds of creation between artists and an algorithm on a canvas. Alongside fostering creativity, it is a case-study of painter-algorithm interactions on a canvas.

• We present interactive painting processes in which a painter and various neural style transfer algorithms interact on a real canvas. We study and characterize the influence of algorithms’ outputs on the final canvas. This allows describing the creative agency of the algorithm in our interactive painting experiments.

In the remainder of this introduction, we review image classification and energy regression techniques, focusing on local methods. Then we present the contributions of this dissertation.

Image classification

Image classification consists in assigning to an input image one class from a given set of classes. This set can be the digits 0 to 9 for handwritten digit recognition or a set of object classes (e.g., car, truck, table …). The publication of large annotated image databases has fostered the development of image classification techniques. It began in the 1990s with the MNIST handwritten digit image database [LeCun et al., 1990, 1998]. In 2004, the Caltech101 database [Fei-Fei et al., 2004], containing 9,146 images divided into 101 object classes, was the first large database of objects. It was followed by the PascalVOC image databases and challenges, published every year between 2005 and 2012 and containing a few thousand images divided into 19 object classes. A major shift was the publication of the ImageNet database [Russakovsky et al., 2015] in 2010, with the corresponding ImageNet Large Scale Visual Recognition Challenge (ILSVRC) held every year between 2010 and 2017. Major improvements in image classification, such as AlexNet [Krizhevsky et al., 2012], VGG [Simonyan and Zisserman, 2014], and ResNet [He et al., 2016], were presented on the occasion of this competition.

Patch-based image classification

Patch decomposition is standard in image processing. The JPEG standard for image compression [Wallace, 1992] relies on the decomposition of the image into 8 × 8 patches. Early visual texture synthesis models [Efros and Leung, 1999] and image inpainting methods [Criminisi et al., 2004] are based on patch decomposition. SIFT descriptors of patches [Lowe, 2004] were at the core of image retrieval, image stitching, and 3D modeling. These descriptors are invariant to translations, rotations, and scaling transformations in the image domain and robust to moderate perspective transformations and illumination variations.

Before the supremacy of deep networks, state-of-the-art image classification methods used patch descriptors. The ILSVRC 2010 challenge was won with 71.8% top-5 accuracy using non-linear coding [Wang et al., 2010] of SIFT and Local Binary Patterns [He and Wang, 1990] patch descriptors, followed by a linear SVM classifier. Sánchez et al. [2013] won the ILSVRC 2011 challenge with 74.3% top-5 accuracy using patch descriptors encoded with Fisher Vectors (FV) [Perronnin et al., 2010]. This classification pipeline relies on the extraction of 24 × 24 patches, which are described with SIFT and Local Color Statistics (LCS) [Ah-Pine et al., 2008]. These SIFT and LCS descriptors are encoded with a finite-dimensional approximation of the Fisher kernel, followed by a normalization step. Finally, a linear SVM classifier is applied. Perronnin et al. [2010] justify the use of patches as « a standard approach to describe an image for classification. » It is thus not a hypothesis deliberately made on the classification function.

Krizhevsky et al. [2012] won the ILSVRC 2012 challenge with ∼ 85.1% top-5 accuracy using a convolutional neural network (CNN) [LeCun et al., 1989] called AlexNet. This method differs from previous image classification techniques such as Fisher Vectors in that it does not rely on patch separation. It processes the input image as a whole.

Neural networks

CNNs are a particular type of neural network. From a formal point of view, a neural network architecture is a class of parametric functions $\{F_\theta, \theta \in \mathbb{R}^P\}$, where $P$ is the number of parameters. A neural network is a parametric function $F_\theta : x \in \mathbb{R}^d \mapsto F_\theta(x)$. The output $F_\theta(x)$ is computed with a succession of linear and non-linear operations:

$$F_\theta(x) = W_L \, \rho\left(W_{L-1}\left(\dots \rho\left(W_0 x\right)\right)\right) \qquad (1.3)$$

where $W_l \in \mathbb{R}^{h_l \times h_{l+1}}$ are linear operators, called the weights of the neural network.

The following hyper-parameters define a neural network architecture:

• $L$: the depth of the neural network.

• ρ: the non-linear function.

• $h_l$: the width of the $l$-th layer of the neural network. $h_{L+1}$ is the dimension of the output of the neural network.

A neural network architecture defines a fixed class of parametric functions, parametrized by $\theta = \{W_0, \dots, W_L\}$.
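The definition above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the non-linearity is taken to be a ReLU, the widths are arbitrary, and the helper names `init_weights` and `forward` are hypothetical. Each weight matrix is stored with shape (output width, input width) so that `W @ h` is well defined, which is an implementation choice of this sketch.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def init_weights(widths, rng):
    """widths = [d, h_1, ..., h_{L+1}]; returns theta = [W_0, ..., W_L]."""
    return [rng.standard_normal((n_out, n_in)) / np.sqrt(n_in)
            for n_in, n_out in zip(widths[:-1], widths[1:])]

def forward(theta, x, rho=relu):
    """Compute W_L rho(W_{L-1}( ... rho(W_0 x)))."""
    h = x
    for W in theta[:-1]:
        h = rho(W @ h)          # linear operator followed by the non-linearity
    return theta[-1] @ h        # no non-linearity after the last operator

rng = np.random.default_rng(0)
widths = [12, 32, 32, 10]       # input dimension d = 12, output dimension 10, L = 2
theta = init_weights(widths, rng)
x = rng.standard_normal(12)
y = forward(theta, x)

P = sum(W.size for W in theta)  # number of parameters: 12*32 + 32*32 + 32*10
print(y.shape, P)
```

Changing `widths` or `rho` changes the architecture, while changing the entries of `theta` moves within the class of functions that the architecture defines.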

Convolutional neural networks

In a standard neural network, an image $x$ with $C$ channels and $H \times W$ pixels is considered as a vector of $\mathbb{R}^{C \times H \times W}$. The first linear operator $W_0$ maps $\mathbb{R}^{C \times H \times W}$ to $\mathbb{R}^{h_1}$. It is called a « fully-connected » linear operator since each coordinate of $W_0 x$ depends upon all the coordinates of the input $x$.

Convolutional neural networks (CNNs) are a particular class of neural network architectures. In CNNs, « fully-connected » linear operators are replaced with discrete convolution operators (see Appendix for an introduction to discrete convolutions). The hidden size $h_l$ of the fully-connected operator is replaced by the number of channels $C_l$ and the spatial size $S_l$ of the convolutional operator $W_l$. In addition to convolutions, a pooling operator $P$ (local average pooling, max-pooling) allows reducing the spatial size of the layers progressively. An example of CNN architecture is shown in Figure 1.1.
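To make the contrast with a fully-connected operator concrete, here is a minimal NumPy sketch of a stride-1 convolution without padding, followed by local average pooling. The function names `conv2d_valid` and `avg_pool`, the shapes, and the random weights are assumptions chosen for illustration; as in most deep learning libraries, the "convolution" is implemented as a cross-correlation.

```python
import numpy as np

def conv2d_valid(x, w):
    """x: (C_in, H, W); w: (C_out, C_in, S, S). Stride 1, no padding."""
    C_out, C_in, S, _ = w.shape
    _, H, W = x.shape
    out = np.zeros((C_out, H - S + 1, W - S + 1))
    for i in range(H - S + 1):
        for j in range(W - S + 1):
            window = x[:, i:i + S, j:j + S]                  # (C_in, S, S)
            out[:, i, j] = np.tensordot(w, window, axes=3)   # sum over C_in, S, S
    return out

def avg_pool(x, k):
    """Non-overlapping k x k average pooling; H and W must be divisible by k."""
    C, H, W = x.shape
    return x.reshape(C, H // k, k, W // k, k).mean(axis=(2, 4))

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 32, 32))
w = rng.standard_normal((16, 3, 5, 5))

y = conv2d_valid(x, w)        # shape (16, 28, 28)
pooled = avg_pool(y, 2)       # shape (16, 14, 14)

conv_params = w.size                 # 16 * 3 * 5 * 5
dense_params = x.size * y.size       # fully-connected map between the same shapes
print(y.shape, pooled.shape, conv_params, dense_params)
```

In this toy setting the convolution uses 1,200 weights, whereas a fully-connected operator between the same input and output shapes would need more than 38 million.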

Receptive field in CNNs

The receptive field of a CNN at layer $l$ is the size of the region of the input image on which a pixel at layer $l$ depends. For example, the receptive field of a single convolution operator is equal to the spatial size $S$ of the convolutional operator $W_0$. The receptive field of a cascade of two convolution operators of sizes $S_1$ and $S_2$ is equal to $S_1 + S_2 - 1$. In CNNs, non-linear operations are usually pointwise, so they do not change the receptive field. Pooling operations, in contrast, increase the receptive field. In usual CNNs such as AlexNet [Krizhevsky et al., 2012], VGG [Simonyan and Zisserman, 2014], and ResNet [He et al., 2016], the receptive field of the last convolutional layer is equal to the whole image size.
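The receptive field of a cascade can be computed layer by layer. The sketch below uses the standard recursion for stacks of convolution and pooling layers described by a kernel size and a stride (no dilation); the function name `receptive_field` and the example layer lists are illustrative and do not correspond to any specific published architecture.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) pairs, ordered from input to output.

    Returns the side length of the input region seen by one output pixel.
    """
    rf, jump = 1, 1   # jump = distance in input pixels between adjacent outputs
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf

print(receptive_field([(5, 1)]))                   # single convolution of size 5
print(receptive_field([(3, 1), (5, 1)]))           # 3 + 5 - 1 = 7
print(receptive_field([(3, 1), (3, 1)]))           # two 3x3 convolutions: 5
print(receptive_field([(3, 1), (2, 2), (3, 1)]))   # with a 2x2 stride-2 pooling: 8
print(receptive_field([(3, 1), (1, 1), (1, 1)]))   # 1x1 convolutions add nothing: 3
```

Inserting a stride-2 pooling between two 3 × 3 convolutions raises the receptive field from 5 to 8, while convolutions of size 1 leave it unchanged.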

Figure 1.1: The architecture of the AlexNet CNN [Krizhevsky et al., 2012]. Convolution operations are represented by an arrow with CONV, the spatial size of the convolution, the stride of the convolution, and the number of convolutional kernels (i.e., filters). Convolutions are followed by a ReLU non-linearity, which is not represented in the figure. Max-pooling operations are represented by an arrow with Max POOL, the spatial size of the region, and the stride. Fully-connected linear operators are represented by an arrow with FC and are followed by a ReLU non-linearity.

Patch-based convolutional neural networks

BagNets [Brendel and Bethge, 2019] have shown that an image can be classified as a bag of patches with a competitive classification accuracy (87.5% top-5 accuracy on ImageNet). A BagNet is a simple variant of the ResNet-50 architecture [He et al., 2016]. Most of the ResNet’s 3 × 3 convolutions are replaced by 1 × 1 convolutions, which limits the receptive field of the last convolutional layer to Q × Q pixels, with Q ∈ {9, 17, 33}. With a global average pooling before the linear classification, the classification decision of this CNN is a sum of classification decisions over Q × Q image patches. This demonstrates that close to state-of-the-art performance can be obtained with a patch separation hypothesis on the classification function.

More recently, Dosovitskiy et al. [2021] replaced the usual convolutional architecture with a Transformer architecture for image classification. This architecture was initially developed by Vaswani et al. [2017] for natural language processing tasks. In this technique, image patches play the role of words in a sentence. With a few adaptations from text to image, the accuracy obtained is 88.4% top-1 (top-5 accuracy not mentioned) at a fraction of the computational cost of CNNs that have similar performance. This technique uses a positional embedding to encode the patch position, but an ablation study shows that removing this positional information results in an accuracy drop of about 4%. Without this positional embedding, the classification decision does not consider the spatial ordering of the patches: the architecture treats the image as a « bag of patches ».
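The « bag of patches » property can be illustrated on a toy model. The sketch below is not the architecture of Dosovitskiy et al. [2021]: it assumes a single self-attention head with random weights, mean pooling over tokens instead of a class token, and toy patch and positional embeddings. All names are hypothetical. It only shows that, without positional embeddings, shuffling the patches leaves the output unchanged.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, Wq, Wk, Wv):
    """Single-head self-attention on an (n_tokens, dim) array, no positional term."""
    q, k, v = tokens @ Wq, tokens @ Wk, tokens @ Wv
    weights = softmax(q @ k.T / np.sqrt(k.shape[1]))
    return weights @ v

def classify(tokens, Wq, Wk, Wv, W_cls):
    """Attention layer, mean pooling over tokens, then a linear classifier."""
    return self_attention(tokens, Wq, Wk, Wv).mean(axis=0) @ W_cls

rng = np.random.default_rng(0)
n_patches, dim, n_classes = 9, 16, 5
patches = rng.standard_normal((n_patches, dim))   # toy patch embeddings
pos = rng.standard_normal((n_patches, dim))       # toy positional embeddings
Wq, Wk, Wv = (rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))
W_cls = rng.standard_normal((dim, n_classes))
perm = np.roll(np.arange(n_patches), 1)           # a fixed non-trivial shuffle

# Without positional embeddings, the patch order does not matter.
same = np.allclose(classify(patches, Wq, Wk, Wv, W_cls),
                   classify(patches[perm], Wq, Wk, Wv, W_cls))
# With positional embeddings, the spatial ordering influences the output.
differs = not np.allclose(classify(patches + pos, Wq, Wk, Wv, W_cls),
                          classify(patches[perm] + pos, Wq, Wk, Wv, W_cls))
print(same, differs)
```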

These two results show that even in the framework of convolutional neural networks, local methods based on image patches can obtain very good accuracy. They encourage the presented study of local methods in the context of image classification.

Energy regression in physics

Potential energy surface

Energy regression in physics consists in fitting a deterministic function. This function, the potential energy surface (PES), maps the atomic positions to the energy of a set of atoms. The Born-Oppenheimer approximation in quantum mechanics guarantees the existence of this function. The potential energy of a system is the lowest eigenvalue of an eigenvalue problem in a functional space. Functions in this functional space map $\mathbb{R}^{3N_e}$ to $\mathbb{C}$, where $N_e$ is the number of electrons of the system, usually greater than the number of atoms. The number of basis functions needed to represent a function in this functional space grows exponentially with the dimension $3N_e$. There is hence another form of the curse of dimensionality in this problem. Since one cannot represent these functions numerically, one cannot solve the eigenvalue problem, and thus one cannot access the actual value of this function.
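A rough count illustrates this exponential growth. The sketch below assumes the simplest possible representation, a regular grid with a fixed number of points per coordinate, which is only one of many possible bases; the helper name `grid_size` and the choice of 10 points per axis are illustrative.

```python
def grid_size(points_per_axis, n_electrons):
    """Number of grid points needed to sample a function on R^(3 * n_electrons)."""
    return points_per_axis ** (3 * n_electrons)

one_electron = grid_size(10, 1)      # 10^3 values
two_electrons = grid_size(10, 2)     # 10^6 values
ten_electrons = grid_size(10, 10)    # 10^30 values (a water molecule has 10 electrons)

print(one_electron, two_electrons, ten_electrons)
```

Even with such a coarse grid, a ten-electron system already requires about 10^30 values, far beyond what can be stored or processed.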

Electronic structure methods such as Hartree-Fock [Hartree, 1928, Fock, 1930] or Density Functional Theory [Hohenberg and Kohn, 1964, Kohn and Sham, 1965] give access to approximate values of this function. However, they require solving a complex optimization problem whose computational cost scales like $O(N^2)$ to $O(N^3)$. This computational cost is prohibitive for systems with a large number of atoms. One can use a coarser approximation with the use of a potential function.

Empirical potentials

Empirical (or parametric) potentials are functions $F$ of the atomic positions $r_1, \dots, r_{N_a}$. They are meant to avoid the computational cost of electronic structure methods and are generally less accurate. The simplest potentials only consider pairwise interactions and are called pair potentials. For example, the widely used Lennard-Jones potential [Jones, 1924] is a pair potential. Its expression is

$$F_{\mathrm{LJ}}(r_1, \dots, r_{N_a}) = 4\epsilon \sum_{i<j}^{N_a} \left[ \left(\frac{\sigma}{r_{ij}}\right)^{12} - \left(\frac{\sigma}{r_{ij}}\right)^{6} \right]$$

where $r_{ij} = \|r_i - r_j\|$ is the distance between atoms $i$ and $j$, $\epsilon$ is a depth parameter, and $\sigma$ is the distance at which the potential crosses zero. The attractive term proportional to $1/r^6$ in the potential comes from the scaling of the van der Waals forces.
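As an illustration, the Lennard-Jones energy of a small set of atoms can be evaluated with a few lines of NumPy. This is a sketch in reduced units (depth and zero-crossing distance both set to 1 by default), assuming that each pair of atoms is counted once; the function name `lennard_jones_energy` is illustrative.

```python
import numpy as np

def lennard_jones_energy(positions, epsilon=1.0, sigma=1.0):
    """Total Lennard-Jones energy of atoms at `positions`, shape (N_a, 3).

    Each pair (i, j) with i < j is counted once.
    """
    positions = np.asarray(positions, dtype=float)
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)             # matrix of distances r_ij
    i, j = np.triu_indices(len(positions), k=1)      # indices of pairs i < j
    sr6 = (sigma / dist[i, j]) ** 6
    return float(np.sum(4.0 * epsilon * (sr6 ** 2 - sr6)))

r_min = 2.0 ** (1.0 / 6.0)   # pair distance minimizing the energy, in units of sigma

e_zero = lennard_jones_energy([[0, 0, 0], [1.0, 0, 0]])       # r = sigma
e_dimer = lennard_jones_energy([[0, 0, 0], [r_min, 0, 0]])    # r = 2^(1/6) sigma
e_trimer = lennard_jones_energy([[0, 0, 0],
                                 [r_min, 0, 0],
                                 [r_min / 2, r_min * np.sqrt(3) / 2, 0]])
print(e_zero, e_dimer, e_trimer)
```

At a pair distance equal to the zero-crossing distance the energy vanishes, and at the minimizing distance each pair contributes minus the depth parameter, so the equilateral trimer has an energy of about three times that value.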

Table of contents

1 Introduction

1.1 Curse of dimensionality in supervised learning

1.2 Local methods

1.2.1 Local methods in physics

1.2.2 Local methods in image classification

1.2.3 Convolutional neural networks

1.2.4 Local methods’ efficiency for image classification and energy regression

1.3 Image classification

1.3.1 Patch-based image classification

1.3.2 Convolutional neural networks

1.3.3 Patch-based convolutional neural networks

1.4 Energy regression in physics

1.4.1 Potential energy surface

1.4.2 Empirical potentials

1.4.3 Machine learning potentials

1.5 Contributions in energy regression in physics

1.5.1 Efficiency of local methods for energy regression

1.5.2 A local method for vibrational entropy regression

1.6 Contributions in image classification

1.6.1 A structured CNN for patch-based image classification

1.6.2 Image classification with patches K-nearest-neighbors

1.7 Convolutional neural networks for artistic creation

1.7.1 Dialog on a canvas with a machine

1.7.2 Neural style transfer with artists

2 Efficiency of local methods for energy regression

2.1 Continuous Solid Harmonic Scattering Transform

2.1.1 Gaussian density representation

2.1.2 Solid harmonic wavelets

2.1.3 Continuous functional operator U

2.1.4 Examples

2.1.5 Continuous scattering coefficients

2.2 Discrete solid harmonic scattering transform

2.2.1 Zero-order densities with multiple Gaussians

2.2.2 First-order densities with a single Gaussian

2.2.3 First-order densities with multiple Gaussians

2.3 Numerical experiments on the QM9 database

2.3.1 Solid Harmonic Scattering Transform parameters

2.3.2 Multi-linear regression

2.4 Graphite database with long-range energies

2.4.1 About graphite

2.4.2 Generating configurations

2.4.3 Energy computations

2.4.4 Cross-validation folds

2.5 Spatial separation based on local SOAP descriptors

2.5.1 Local SOAP descriptor

2.5.2 Spatial separation method

2.6 Regression results

2.6.1 Parameters of solid harmonic scattering representation

2.6.2 Results

2.6.3 Results with two SOAP and a pairwise potential

2.6.4 Discussion

3 A local method for vibrational entropy regression

3.1 Physical background

3.2 Vibrational entropy in the harmonic approximation

3.3 Configuration database

3.3.1 Generating configurations

3.3.2 Computing vibrational entropies

3.4 Regression of the vibrational entropy

3.4.1 Permutation invariance and short-range separation

3.4.2 Local AFS descriptor

3.4.3 Solid harmonic scattering descriptor

3.4.4 Linear regression

3.5 Results

3.5.1 Influence of interatomic potential and descriptor set

3.5.2 Modeling datasets with multiple defect species and variable supercell volume

3.5.3 Training on combined disordered and crystalline datasets

3.5.4 Transferability of the crystalline model to disordered structures

3.6 Discussion

4 Structured patch-based convolutional neural network for image classification

4.1 Scattering Transform descriptor of patches

4.2 Classification with patch separation

4.2.1 Supervised local encoding of Scattering

4.2.2 Patch separation

4.3 Image Classification on ImageNet

4.4 Discussion

5 Image classification with patches K-nearest-neighbors

5.1 Convolutional Kernel Methods

5.2 Method

5.3 Experiments

5.3.1 CIFAR-10

5.3.2 ImageNet

5.3.3 Dictionary structure

5.4 Discussion

6 Creativity in human-machine artistic interaction

6.1 Dialog on a canvas with a machine

6.1.1 Creation Process

6.1.2 Installation and Specifications

6.1.3 Fostering Creativity

6.1.4 Human and Machine Interplay

6.2 Interactive neural style transfer with artists

6.2.1 Introduction

6.2.2 Evaluating Neural Style Transfer Methods

6.2.3 Quantitative evaluation

6.2.4 Instability phenomena

6.2.5 Interactive Portrait Painting Experiments

6.2.6 Computational catalyst in the interaction

6.3 Discussion

7 Conclusion

7.1 Summary of findings

7.2 Future perspectives

A Solid harmonic scattering transform

A.1 Solid harmonic wavelets normalization

A.2 Fourier transform of solid harmonic wavelets

A.3 Covariance of the operator U to rotations

A.4 Examples of Ul,j []

A.4.1 Single Gaussian

A.4.2 Multiple Gaussians

B Patches K-nearest-neighbors image classifier

B.1 Mahalanobis distance and whitening

B.2 Implementation of the patches K-nearest-neighbors encoding

B.3 Intrinsic dimension estimate

Bibliography