In an era when more content becomes available electronically every day, it has become harder for consumers to find interesting media such as books, movies, TV shows, songs and textual information. The sheer volume of information is overwhelming and presents users with a virtually unlimited number of content possibilities. Without any personalization, navigating this sea of information makes it hard to find relevant content when thousands of results are involved. This may seem like a minor inconvenience when the purpose is leisure, but companies that fail to provide the right content to their users for lack of personalization mechanisms may suffer significant revenue losses. At the other end of the spectrum, without a personalized recommender system, researchers might not be able to find the information relevant to their research. These needs led to the creation of hyper-personalized recommender systems in various industries. These systems rely on a user's personal profile, such as tastes, past history and behaviour, to provide a subset of tailored content. They are omnipresent and an integral part of popular products and services consumed by everyday users, learning from different signals to provide a small set of highly personalized results.

Moreover, these systems also improve catalog discoverability and serendipity, and they complement search. Netflix estimates that 75% of new content consumed by its users comes through a recommendation algorithm (Amatriain & Basilico, 2012), while 60% of YouTube videos accessed from the homepage come from a recommender system (Davidson et al., 2010). On popular e-commerce platforms like Amazon, users can navigate through suggestions based on a combination of their own purchase history and the similar histories of other users to find the best deals and narrow down which items to buy next (Linden et al., 2003). Companies also benefit from investing in recommender systems, which provide a better user experience and increase profit margins. At Amazon, 35% of revenue comes from personalized recommendations (MacKenzie et al., 2013), while Netflix stated that these systems save it more than $1B per year (Gomez-Uribe & Hunt, 2015). Spotify, a popular music streaming service, estimates that its recommender system helped increase its monthly users from 75 million to 100 million (Leonard, 2016).

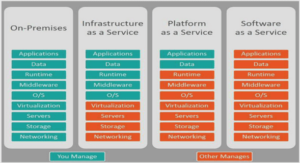

These companies attract many users and reach their financial goals with these hyper-personalized systems. They also collect a considerable amount of information about their users, such as content preferences, usage patterns and interactions, to update their models and provide a more tailored experience. Two types of data are usually used when building a recommendation algorithm. The first type is a binary or discrete value representing the interaction between a user and an item. In the literature, this type of data is often called “implicit interaction” and generally represents an action of a user (e.g., a click, a view or a purchase). The second type is denoted as “explicit interaction” and usually expresses the user's level of interest directly (e.g., a rating on an ordinal scale denoting satisfaction). Along with these two types of data, metadata describing the content (e.g., the title of a movie, the text of a book or the pixel values of an image) can also be used as an additional source of knowledge in a recommender system.

In this thesis, we distinguish three types of algorithms. The first is collaborative filtering (CF), which builds neighbourhoods of similar users or items from interaction data. The second is content-based filtering (CBF), which considers similarities between contents. The last is hybrid methods (HB), which combine two or more recommenders or use an expressive model to encode high-dimensional information. Each of these techniques is described in the next chapter, along with the two data types.
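The difference between the two data types can be made concrete with a small sketch. The toy (user, item, rating) log below is invented for illustration, and using 0 to mark an unobserved entry is an assumption of this example rather than a convention taken from the cited works. The explicit matrix keeps the rating itself, while the implicit matrix only records that an interaction occurred.

```python
import numpy as np

# Hypothetical toy log of (user, item, rating) triples on a 1-5 scale.
ratings = [
    (0, 0, 5), (0, 2, 3),
    (1, 1, 4),
    (2, 0, 1), (2, 2, 5), (2, 3, 2),
]
n_users, n_items = 3, 4

# Explicit interaction matrix: stores the ordinal rating, 0 means "unobserved".
explicit = np.zeros((n_users, n_items))
for u, i, r in ratings:
    explicit[u, i] = r

# Implicit interaction matrix: 1 if any interaction happened, regardless of the rating.
implicit = (explicit > 0).astype(int)

print(explicit)
print(implicit)
```

Note that user 2's rating of 1 on item 0 becomes a 1 in the implicit matrix: the implicit view records that an interaction happened but loses whether the user actually liked the item.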

In recent years, tackling problems with machine learning (ML) has become increasingly popular due to the availability of data and graphics processing units (GPUs). This enables practitioners and researchers to train deep architectures, an approach known as deep learning (DL). Such techniques are especially promising since they can automatically extract meaningful features from unstructured data like images, text and sound. This removes the need for handcrafted features and enhances traditional recommender models such as latent-factor or similarity-based models (Oord et al., 2013; Wang et al., 2014). More recent work relies on deep models alone: Tan et al. (2016) exclusively use a recurrent neural network to encode the temporal structure of user sessions, while Liang et al. (2018a) encode implicit interactions with a latent-variable model, the variational autoencoder (VAE), to provide recommendations.

Collaborative Filtering

Collaborative filtering (CF) is one of the most widely used families of algorithms in industry due to its wide range of applications, from e-commerce websites to social media platforms (Chen et al., 2018). Traditional CF algorithms use the relationships between users and the items they interacted with to identify new user-item associations, relying on the users' explicit or implicit interactions.

Memory-based CF

Memory-based methods consider the relationships between users and items directly. They build neighbourhoods of entities that share similar information, such as similar interaction histories for users or similar sets of interacting users for items. They then find the nearest neighbours of a target user or item, compute their similarity, and use it to produce an ordered list of candidate items. Memory-based CF can be further subdivided into two methods: user-based CF and item-based CF.
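As an illustration, the sketch below scores items with both variants on a toy implicit matrix and recommends, for each user, the best item they have not seen yet. Cosine similarity, the neighbourhood size `k` and the helper names (`user_based_scores`, `item_based_scores`, `top_unseen`) are assumptions made for this example; other similarity measures, such as Pearson correlation, are also common.

```python
import numpy as np

def cosine_similarity(m: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of m."""
    norms = np.linalg.norm(m, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # rows with no interactions get similarity 0
    unit = m / norms
    return unit @ unit.T

def user_based_scores(R: np.ndarray, k: int = 1) -> np.ndarray:
    """User-based CF: similarity-weighted average of the rows of the k nearest users."""
    sim = cosine_similarity(R)
    np.fill_diagonal(sim, 0.0)  # a user is not its own neighbour
    scores = np.zeros(R.shape)
    for u in range(R.shape[0]):
        neighbours = np.argsort(sim[u])[::-1][:k]
        weights = sim[u, neighbours]
        if weights.sum() > 0:
            scores[u] = weights @ R[neighbours] / weights.sum()
    return scores

def item_based_scores(R: np.ndarray) -> np.ndarray:
    """Item-based CF: sum of similarities between each item and the user's items."""
    sim = cosine_similarity(R.T)
    np.fill_diagonal(sim, 0.0)  # an item does not vote for itself
    return R @ sim

def top_unseen(scores: np.ndarray, R: np.ndarray) -> np.ndarray:
    """Highest-scoring item per user among the items the user has not seen."""
    return np.where(R > 0, -np.inf, scores).argmax(axis=1)

# Toy implicit matrix: 3 users x 4 items, 1 = interaction observed.
R = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 1],
], dtype=float)

print(top_unseen(user_based_scores(R), R))
print(top_unseen(item_based_scores(R), R))
```

Both functions recompute the similarities from the full matrix on every call, which reflects the scalability issue of memory-based methods.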

Model-based CF

Memory-based CF algorithms are easy to understand and simple to implement but are not well suited to large datasets. Computing the predicted rating r̂ requires the entire database for every prediction, which is memory-inefficient, scales poorly and can therefore be considerably slow. Unlike memory-based methods, model-based CF algorithms usually require a learning phase that extracts information from the dataset to build a “model”. This model is then used to make predictions without going through the entire database each time. Learning the model beforehand makes prediction fast and scalable enough for real-time recommendation.

Among the available techniques, latent-factor models are very popular and provide competitive results (Chen et al., 2018). Latent-factor models factorize the implicit interaction matrix into two low-rank matrices holding the user and item latent features. To produce these low-rank matrices, matrix factorization (MF) techniques such as Alternating Least Squares (ALS) (Hu et al., 2008) or optimization via stochastic gradient descent (SGD) (Jorge et al., 2017) are generally used, as well as deep learning techniques (Zhang et al., 2019).
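A minimal sketch of the SGD route follows, assuming an explicit rating matrix in which 0 marks a missing entry. It learns user factors P and item factors Q by minimizing the regularized squared error over observed entries only, that is the sum of (r_ui − p_u·q_i)² + λ(‖p_u‖² + ‖q_i‖²). This is a generic textbook formulation, not the specific method of the cited works, and the number of factors, learning rate, regularization weight and epoch count are arbitrary assumptions. ALS would instead alternate closed-form least-squares solves for P and Q.

```python
import numpy as np

def factorize_sgd(R, mask, n_factors=2, lr=0.02, reg=0.01, n_epochs=1000, seed=0):
    """Approximate R by P @ Q.T, fitting only the entries where mask is True."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    P = 0.1 * rng.standard_normal((n_users, n_factors))  # user latent factors
    Q = 0.1 * rng.standard_normal((n_items, n_factors))  # item latent factors
    observed = np.argwhere(mask)  # (n_observed, 2) array of (user, item) pairs
    for _ in range(n_epochs):
        rng.shuffle(observed)  # visit observed entries in a random order each epoch
        for u, i in observed:
            err = R[u, i] - P[u] @ Q[i]
            p_u = P[u].copy()  # keep the old user vector for the item update
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_u - reg * Q[i])
    return P, Q

# Hypothetical toy ratings: 5 users x 4 items, 0 = unobserved.
R = np.array([
    [5, 3, 0, 1],
    [4, 0, 0, 1],
    [1, 1, 0, 5],
    [1, 0, 0, 4],
    [0, 1, 5, 4],
], dtype=float)
mask = R > 0

P, Q = factorize_sgd(R, mask)
pred = P @ Q.T  # predicted scores for every user-item pair, observed or not
rmse = np.sqrt(np.mean((R[mask] - pred[mask]) ** 2))
print(f"training RMSE: {rmse:.3f}")
```

Once P and Q are learned, the score of any unobserved pair (u, i) is just `P[u] @ Q[i]`, so prediction no longer requires a pass over the whole dataset.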

Challenges

In standard scenarios where users and items have sufficient interactions, CF-based techniques usually perform well and provide diverse and interesting recommendations. However, these models require a user to have at least one interaction; otherwise, CF cannot produce recommendations for that user. Furthermore, most systems require a minimum number of interactions to perform well. This scenario is called the “cold-start” problem. In addition, as the numbers of users and items grow, the size of the user-item matrix grows with their product, while each user interacts with only a small fraction of the catalog, so the matrix becomes increasingly sparse. Depending on the algorithm used, this sparsity can become a serious problem. Finally, CF is based on the assumption that users with similar tastes or preferences share similar interaction histories, and it can become inaccurate when many users have rare or unique tastes. This unique-preference problem is called the “grey sheep” issue.
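These challenges can be inspected on a toy matrix. The sketch below computes the density of the interaction matrix, flags cold-start users who have fewer than a minimum number of interactions, and flags potential grey sheep as users whose best cosine similarity to any other user falls below a threshold. The thresholds and the grey-sheep criterion are illustrative assumptions, not a standard definition.

```python
import numpy as np

def density(R: np.ndarray) -> float:
    """Fraction of user-item pairs with an observed interaction (1 - sparsity)."""
    return np.count_nonzero(R) / R.size

def cold_start_users(R: np.ndarray, min_interactions: int = 2) -> np.ndarray:
    """Users with fewer than `min_interactions` observed interactions."""
    counts = np.count_nonzero(R, axis=1)
    return np.flatnonzero(counts < min_interactions)

def grey_sheep_users(R: np.ndarray, threshold: float = 0.2) -> np.ndarray:
    """Users whose highest cosine similarity to any other user is below `threshold`.

    Users with no interactions at all also fall below the threshold.
    """
    norms = np.linalg.norm(R, axis=1, keepdims=True)
    norms[norms == 0] = 1.0
    unit = R / norms
    sim = unit @ unit.T
    np.fill_diagonal(sim, -np.inf)  # ignore self-similarity
    return np.flatnonzero(sim.max(axis=1) < threshold)

# Toy implicit matrix: user 3 only likes items nobody else touched, user 4 is new.
R = np.array([
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0],
], dtype=float)

print(density(R), cold_start_users(R), grey_sheep_users(R))
```

Even in this 5 × 5 example, almost two thirds of the matrix is empty; real catalogs are usually far sparser.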

Content-Based Filtering

Unlike CF methods, which build neighbourhoods of common tastes and preferences based only on interaction histories, content-based filtering (CBF) makes recommendations based on item metadata. Nowadays, most systems hold some form of prior knowledge about their items. In an e-commerce setting, metadata includes the item title, description, brand and categories, while on a music streaming platform, it can be the song name, artist, album, genre and release date.

Most CBF systems use the available item information, along with some engineered features, to produce the final recommendation. For example, a music recommendation system might analyze the audio files to determine features such as loudness, tempo and timbre (O’Bryant, 2017). Typical CBF systems consist of three parts: feature extraction, user profile creation and recommendation.
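The three parts can be illustrated with a toy pipeline. In this sketch, feature extraction is a TF-IDF encoding of short hypothetical text descriptions, the user profile is the mean vector of the items the user liked, and recommendation ranks unseen items by cosine similarity to that profile. These choices, the item names and the helper functions are assumptions for illustration only; a real system would typically use richer features, such as the audio descriptors mentioned above.

```python
import numpy as np

# Hypothetical item metadata (e.g., tags or short descriptions).
items = {
    "song_a": "rock guitar live",
    "song_b": "rock guitar ballad",
    "song_c": "jazz piano live",
    "song_d": "jazz piano trio",
}

def tfidf_matrix(docs):
    """1. Feature extraction: L2-normalised TF-IDF vectors, one row per document."""
    tokenized = [doc.split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    index = {tok: j for j, tok in enumerate(vocab)}
    df = np.zeros(len(vocab))
    for toks in tokenized:
        for tok in set(toks):
            df[index[tok]] += 1  # number of documents containing the term
    idf = np.log(len(docs) / df) + 1.0  # +1 keeps terms that appear everywhere
    X = np.zeros((len(docs), len(vocab)))
    for row, toks in enumerate(tokenized):
        for tok in toks:
            X[row, index[tok]] += 1.0  # raw term frequency
    X *= idf
    X /= np.linalg.norm(X, axis=1, keepdims=True)
    return X, vocab

def user_profile(X, liked_rows):
    """2. User profile: mean feature vector of the items the user liked."""
    return X[liked_rows].mean(axis=0)

def recommend(X, profile, exclude, top_n=2):
    """3. Recommendation: rank items not in `exclude` by cosine similarity to the profile."""
    scores = X @ profile / (np.linalg.norm(profile) or 1.0)
    order = np.argsort(-scores)
    ranked = [int(j) for j in order if int(j) not in exclude]
    return ranked[:top_n]

names = list(items)
X, vocab = tfidf_matrix(list(items.values()))
profile = user_profile(X, [0])  # the user liked song_a
recs = recommend(X, profile, exclude={0})
print([names[j] for j in recs])
```

Averaging the liked items' vectors is one of the simplest ways to build a profile; weighting items by rating or recency are common alternatives.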
